Introduction

Every era has its buzzwords, and the IT sector is no different: “multimedia” in the 80s, “interactive” in the 90s, and “web 2.0” in the last few years. And just when everyone’s starting to get comfortable with the most recent piece of terminology, along comes another one. The latest phrase du jour is “in-the-cloud” and, just like clouds themselves, it seems to be a rather nebulous concept. This article aims to clear up some of the confusion and to explain the differences between cloud computing and in-the-cloud security.

How do clouds come into it?

If you’ve ever seen a network diagram that includes the Internet, you might have noticed that the standard symbol for the Internet is a cloud. This makes sense: you can’t see inside a cloud, and you can’t see exactly which computers are currently “in” the Internet. If there are clouds in the sky, you can guess it’s probably going to rain – you don’t need to know what’s happening inside the cloud. In the case of the Internet “cloud”, all you need to know is that it’s there and that you can connect to it. You don’t need to know exactly what’s going on inside.

Cloud computing

The forerunner of cloud computing

Cloud computing existed long before Windows, MacOS and Linux began their march onto users’ systems. Back then, however, the concept had a different name: it was referred to as “mainframe + terminal”. The mainframe was a very powerful server where all programs were run and all data was stored, while the terminal was a simple system that was only used to connect to the mainframe. Of course, such systems required both a fast (and therefore expensive) mainframe and the appropriate network infrastructure. IBM’s PC and home computers needed neither – they were designed to provide everything in one machine, and they fairly quickly rendered the concept of mainframes obsolete.

Companies like Hewlett-Packard, Sun and Citrix have tried to keep the idea alive, however, replacing the terminal with what is now called a thin client, and the big mainframes with powerful off-the-shelf servers. These servers generally use standard PC technology, only with faster and more expensive processors, more RAM and more disk space than the average desktop computer. Nevertheless, the whole concept still depends on a powerful server and a fast network connection, which is why home users haven’t adopted such systems until now: they simply didn’t have access to such resources. These days, however, it’s quite common for private households to have high-speed Internet access with download speeds of several MB per second.

The infrastructure is almost ready

Although the mainframe and thin client concept can be seen as the forerunner of cloud computing, there is a difference. A company that deploys thin client technology usually has to buy its own server and provide a hosting location, electricity and so on. In the case of cloud computing, though, all the hardware is bought by an in-the-cloud provider, who then rents the available capacity to anyone who needs it. The interesting point is that customers don’t rent a server, just a certain amount of memory or processor cycles. The customer doesn’t need to know whether everything is hosted on a single computer or spread across several machines. Providers can even move a customer’s software to different hardware without any noticeable effect, thanks to technologies designed to enable this kind of hot-swapping. And this is what the cloud is really about: as a customer, you don’t have to worry about the details. If you need more memory or more CPU power, you simply click the appropriate button to request it.

One of the first major players in the area of cloud computing was Amazon, which introduced its Amazon Elastic Compute Cloud (EC2) in 2006 and took it out of beta in 2008. Recently, Canonical – the company behind Ubuntu Linux – announced that Amazon’s service will be integrated into Ubuntu 9.10, due next autumn. Other companies such as Microsoft and Google have also entered the market and will fight for their share of the potential profits. There are also thousands of hosting companies offering “virtual servers” to small businesses or individuals who need a cheap web server, and this can also be seen as a type of in-the-cloud service.

So the market is there, it is going to grow fast, and it will be the job of service providers to offer hardware that meets customer needs. Setting up these machines will be one of the biggest challenges: as soon as there’s significant take-up of in-the-cloud services, more and more server centers will be needed. Such centers have to be geographically close to their customers, because every kilometer increases the delay between client and server. Although this delay is small, demanding users such as gamers find even a delay of 200 milliseconds unacceptable, as it detracts from the experience of real-time gaming. Once individuals become interested in using in-the-cloud services, providers will have to buy a lot of extra hardware to meet the additional demand. Within the next decade or so there will be server centers in every town, with some possibly located in multi-occupancy buildings that house more than 100 residents.
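To put the distance argument into numbers, the sketch below estimates the minimum round-trip delay that distance alone adds. It assumes that signals in optical fiber travel at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing, queuing and server processing, all of which add further delay in practice. The function name is purely illustrative.

```python
# Rough lower bound on the round-trip time caused by distance alone.
# Assumption: signals in optical fiber travel at about 200,000 km/s.
# Routing, queuing and processing delays are ignored, so real latency is higher.

FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Return the minimum round-trip propagation delay in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    for km in (10, 500, 2_000, 10_000):
        print(f"{km:>6} km -> at least {round_trip_ms(km):.1f} ms round trip")
```

Under these assumptions every 1,000 km of cable adds at least 10 ms to each round trip before any other overhead is counted, which helps explain why providers would want server centers close to their customers.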

The benefits

Though most in-the-cloud providers currently target business customers, it’s likely that individuals will make the concept a success by using it in large numbers. Businesses have dedicated IT staff, but for individuals, owning a computer can mean stress. First you have to buy a computer, which is harder than it sounds: should you choose a highly portable laptop, or does a cheaper and often faster desktop better meet your needs? In the world of cloud computing you can have both: you can buy a cheap thin-client-style laptop (which you can connect to a monitor and keyboard if you want) for less than 300 euros. Then all you need to do is connect to your in-the-cloud provider and enjoy as much performance and memory as you like (or can afford). Two years later, when you would normally have to replace your out-of-date laptop, you can still use your thin client, because it’s the provider, rather than the machine itself, that delivers the performance.

The stresses associated with owning a computer aren’t limited to hardware headaches; updating the operating system and applications, as well as patching vulnerabilities, can be a challenging task. Cloud computing takes care of all of these issues, making home computing cheaper, more secure, and more reliable.

Cloud computing will also benefit the content industry. In the past a number of methods have been used to prevent the illegal copying of music and movies, but none of them is problem-free. There have been cases of copy-protected CDs not working on some CD players, and Sony’s efforts to protect content resulted in a media scandal and the withdrawal of the technology used. More and more MP3 shops are moving away from DRM-protected material and offering unprotected music files instead. Cloud computing, however, will give DRM a new lease of life, with content producers offering movies, games and music directly to the consumer. Such content will be designed to run within a cloud computing system, and making unsanctioned copies of movies and music delivered in this way will require more time and effort. Ultimately, this will result in fewer illegal copies and higher profits for the producers.

The risks

There are clear benefits to cloud computing, but there are also risks. Hardly a day goes by without a report of data being leaked or lost. Using in-the-cloud services means placing unprecedented trust in the provider. Is there any company you trust so much that you’re willing to give it full access not only to your emails, but also to all your private documents, bank account details, passwords, chat logs and photos of yourself and your family? Even if you trust the company, there’s no guarantee that your information won’t fall into the wrong hands. The key point is this: data leakage isn’t exclusive to cloud computing, but cloud providers will have access to all of your data rather than just selected parts of it. So if a leak does occur, the consequences could be very wide-ranging.

Will the risks drive cloud computing out of business? This seems very unlikely, as cloud computing is convenient for users and profitable for providers. Refusing to use in-the-cloud services altogether would leave a company just as isolated (and unable to do business) as refusing to use email would today. Rather than boycotting this technology, a more productive approach will involve new legislation and strict guidelines for providers, as well as technologies that make it (almost) impossible for a provider’s employees to trawl through customer data. At the moment, any company that wants to offer in-the-cloud services is free to do so, but the picture will be very different in ten years’ time: providers will have to abide by standards if they want to offer such services.

The introduction of standards will also attract malware writers and hackers; this has been amply demonstrated by the standardization of PCs, the overwhelming majority of which run Windows. Once cloud computing reaches critical mass, there will probably be a few highly specialized hackers who know how to break into cloud systems in order to steal or manipulate data, and they will be able to make a lot of money. There will also be scammers who have no particular interest in technology but use today’s tricks, such as 419 (advance-fee) emails, to get their hands on victims’ money. And there will be cybercriminals creating and using Trojans, worms and other malware, and security companies protecting their customers from these threats. Overall, there won’t be much change, except that everyone – users, providers and cybercriminals alike – will be working in the cloud.

In-the-cloud security

The main principle of cloud computing is being able to use computer resources without having physical access to the computer itself. In-the-cloud security is completely different: it means using outsourced security services that are offered in the cloud, while the operating system still runs locally on the PC on your desk. In-the-cloud security comes in different flavors. For instance, Kaspersky Lab offers Kaspersky Hosted Security Services, which provide anti-spam and anti-malware services by filtering traffic for harmful content before it reaches the end user. The company’s personal products also offer in-the-cloud security in the form of the Kaspersky Security Network. The rest of this article focuses specifically on the latter type of security service, as offered in desktop solutions.

Back in February 2006, Bruce Schneier blogged about security in the cloud. He wasn’t the only one to address the topic, and although there was a lot of discussion about how the concept should be implemented, it was generally agreed that implementation was a must rather than an option. So why has it taken the AV industry more than two years to start implementing this technology in its products?

A question of necessity

Implementing new technologies not only takes time and money, but often has disadvantages. The most obvious disadvantage related to in-the-cloud services – whether cloud computing or in-the-cloud security – is that it’s essential to be online in order to enjoy the benefits. As long as existing technologies were able to provide protection against the latest malicious programs without the user having to be online, there was no need for change. As always, however, the changing threat landscape has led to changes in the antivirus industry.

The traditional way of detecting malicious software is to use so-called signatures. A signature is like a fingerprint: if it matches a piece of malware, the malware will be detected. However, there’s a difference between human fingerprints and signatures. A fingerprint only matches one individual, whereas a good signature doesn’t just identify a single unique file, but several modifications of that file. High-quality signatures mean not only higher detection rates but also fewer signatures and, consequently, lower memory consumption. That is why the number of signatures provided by AV companies is usually much lower than the total number of files known to be malicious.
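The idea that one signature can cover several modifications of a file can be illustrated with a toy byte pattern in which some positions are wildcards. This is a hypothetical, heavily simplified sketch (real AV signature formats are proprietary and far more sophisticated), and the byte strings below are invented placeholders rather than real malware.

```python
from typing import List, Optional, Sequence

# Toy signature format (hypothetical): a sequence of byte values in which
# None is a wildcard that matches any single byte.
Signature = Sequence[Optional[int]]


def matches_at(data: bytes, sig: Signature, offset: int) -> bool:
    """Return True if the signature matches data starting at offset."""
    if offset + len(sig) > len(data):
        return False
    return all(s is None or data[offset + i] == s for i, s in enumerate(sig))


def scan(data: bytes, sig: Signature) -> bool:
    """Return True if the signature matches anywhere in data."""
    return any(matches_at(data, sig, off) for off in range(len(data) - len(sig) + 1))


# One signature: the literal bytes "EVIL", any single byte, then "PAYLOAD".
SIG: List[Optional[int]] = [*b"EVIL", None, *b"PAYLOAD"]

if __name__ == "__main__":
    variant_a = b"\x90\x90EVIL\x01PAYLOAD"  # first modification
    variant_b = b"EVIL\x02PAYLOAD\x00\x00"  # second modification
    clean = b"just an ordinary file"
    for name, sample in [("variant_a", variant_a), ("variant_b", variant_b), ("clean", clean)]:
        print(name, scan(sample, SIG))
```

The two variants differ in the wildcard byte and in their surroundings, yet a single database entry detects both, which is why good signatures keep the database smaller than the number of known malicious files.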

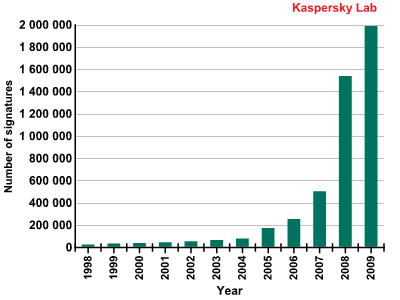

Clearly, good signatures can help keep signature databases small, but this doesn’t tackle the fundamental problem: the more malicious files there are, the more signatures will be needed. And as the graph above shows, the number of signatures has increased dramatically over the past few years in response to the explosion in the number of malicious programs.

An increased number of signatures doesn’t only result in higher memory consumption and additional download traffic, but also in a decrease in scan performance. At the time of writing, the Kaspersky Lab signature databases used in personal products were 45 MB in size. If the trend illustrated above continues (and there’s no reason to believe it won’t), these databases will swell to more than 1,000 MB within the next three to four years. That is more than the total RAM of some computers; databases of this size would leave no room for an operating system, browser or games, and devoting a computer’s resources to scanning the machine itself rather than to work or play isn’t a satisfactory solution.
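The projection in the previous paragraph implies a specific growth rate. Assuming steady exponential growth (a simplifying assumption, not a figure from Kaspersky Lab), going from 45 MB to 1,000 MB in n years requires an annual growth factor of (1000/45)^(1/n). The short sketch below computes it for three and four years.

```python
def implied_annual_growth(start_mb: float, end_mb: float, years: float) -> float:
    """Annual growth factor g such that start_mb * g**years == end_mb."""
    return (end_mb / start_mb) ** (1.0 / years)


if __name__ == "__main__":
    for years in (3, 4):
        g = implied_annual_growth(45, 1000, years)
        print(f"{years} years: database grows about {g:.2f}x per year")
```

This gives roughly 2.81x per year over three years and 2.17x per year over four, so the forecast amounts to the databases doubling to nearly tripling in size every year.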

So what’s the answer? Deleting old database records relating to MS-DOS malware wouldn’t help: the amount of memory gained would be smaller than the daily increase in new signatures. Although, as mentioned above, more efficient signatures can help, this is fighting the symptoms rather than the disease, and it won’t ultimately stop the number of database records from growing.

In 2007 some AV companies realized that in-the-cloud security would be the only way out of this situation. The need to be online at all times was still seen as a drawback compared to desktop security solutions, but ultimately the benefits of this technology outweigh the disadvantages.

The advantages of in-the-cloud security

The whole idea behind in-the-cloud security as Kaspersky Lab defines it is that a potentially malicious file or website is checked online instead of being checked against local signatures stored on the computer itself. The easiest way to do this is to calculate the checksum (i.e. the digital fingerprint) of a file and then ask a designated server whether a file with this checksum has already been identified as malicious. If the answer is “yes”, the user is shown a warning message and the malware is quarantined.
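A minimal sketch of this lookup follows. It assumes SHA-256 as the checksum (the text does not name an algorithm) and replaces the network request to the designated server with a hypothetical local set of known-malicious fingerprints. `query_server`, `check_file` and the sample entry are illustrative names, not part of any Kaspersky Lab API.

```python
import hashlib
import tempfile
from pathlib import Path

# Hypothetical stand-in for the vendor's server-side database of fingerprints
# already classified as malicious. The single entry is a placeholder.
KNOWN_MALICIOUS = {hashlib.sha256(b"malicious sample").hexdigest()}


def file_fingerprint(path: Path) -> str:
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(64 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def query_server(fingerprint: str) -> bool:
    """Hypothetical server call; a real client would send this over the network."""
    return fingerprint in KNOWN_MALICIOUS


def check_file(path: Path) -> str:
    """Look up the file's fingerprint; the file contents are never sent."""
    if query_server(file_fingerprint(path)):
        return "malicious: warn user and quarantine"
    return "no known threat"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "sample.bin"
        sample.write_bytes(b"malicious sample")
        print(check_file(sample))  # flagged
        sample.write_bytes(b"harmless data")
        print(check_file(sample))  # not flagged
```

Hashing a file is a single linear pass over its bytes, which is why this check is cheap compared with matching thousands of local signatures.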

From a user’s point of view there’s no significant difference between this approach and the old one, apart from improved computer performance. Creating a checksum for a file doesn’t take much processor time, so doing this is several times faster than performing a complex signature-based scan. There are also a number of other advantages which a user might not notice at first, but will still benefit from:

Lower memory consumption and smaller download footprint. As mentioned above, in a few years’ time classic signature databases will be pushing the limits of what users are willing to accept in terms of size. In-the-cloud solutions solve this problem easily: all the “fingerprints” are stored on the AV company’s servers. The only things stored on the end user’s machine are the AV software itself plus some data used for caching. The server is only contacted if a new, as yet unidentified program is found on the local disk, so if the user doesn’t install any new programs, there’s no need to download any new data. This is in complete contrast to today’s situation, where signatures have to be constantly updated in case a new (and possibly malicious) program is installed.
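The caching behavior described above can be sketched as a small verdict cache: the server is queried only for fingerprints the client hasn’t seen recently. The class name, the injectable clock and the time-to-live are assumptions added for illustration; the expiry is included on the assumption that a cached verdict may need refreshing if a file is later reclassified.

```python
import time
from typing import Callable, Dict, Tuple


class VerdictCache:
    """Hypothetical client-side cache of server verdicts keyed by fingerprint."""

    def __init__(
        self,
        query_server: Callable[[str], bool],
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._query_server = query_server
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[bool, float]] = {}
        self.server_queries = 0  # how often the network was actually used

    def is_malicious(self, fingerprint: str) -> bool:
        now = self._clock()
        entry = self._entries.get(fingerprint)
        # Contact the server only for unseen or expired fingerprints.
        if entry is None or now - entry[1] > self._ttl:
            self.server_queries += 1
            entry = (self._query_server(fingerprint), now)
            self._entries[fingerprint] = entry
        return entry[0]


if __name__ == "__main__":
    cache = VerdictCache(query_server=lambda fp: fp == "bad-fingerprint")
    for fp in ["bad-fingerprint", "good-fingerprint", "good-fingerprint", "bad-fingerprint"]:
        cache.is_malicious(fp)
    print("server queries:", cache.server_queries)  # 2
```

Four checks cost only two server round trips, because repeated files are answered locally. A client that installs nothing new generates almost no traffic.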

Better response times. Response times have always been a hot topic for the AV industry. It’s one thing to make a new signature available, but if this signature arrives several hours after a user opens an infected attachment, it’s often too late: the computer is probably already part of a botnet and will have downloaded additional malicious components for which no detection exists yet. This is why Kaspersky Lab began delivering almost hourly updates when many AV companies were still adhering to a daily update cycle. Nevertheless, the time lapse between the appearance of a new virus and the release of a signature could still be an hour or more. Proactive detection methods and client-side emulation technologies can compensate for this gap, but the problem persists. The impact of in-the-cloud security is clear: because the check is conducted on demand and in real time, the response time is significantly better. As soon as an analyst identifies a file as malicious, this information is available to the client, bringing the response time down to minutes or even seconds.

It’s not just signatures for Trojans, viruses and worms that can be transmitted using in-the-cloud technology, but almost everything that forms part of regular signature updates: URLs of dangerous websites, titles and keywords that appear in the latest spam mailings, and even complete program profiles that can be used by host intrusion prevention systems (HIPS) such as the one in Kaspersky Internet Security 2009. Nor is this technology restricted to PCs. It also makes sense to use it to protect mobile devices, particularly as smartphones have much less RAM than a PC, so every byte counts. Most AV solutions for mobile phones focus on detecting mobile threats, as detecting all malware that targets Windows XP and Vista would consume too many resources. In-the-cloud technology could make this issue a thing of the past.

Two-way communication

It’s obvious that in-the-cloud systems can tell a customer whether a file on his machine is infected or not: the client machine queries, and the server answers. However, depending on how the technology is implemented, the process can also work the other way round, with customers helping the AV company to identify and detect new threats. Suppose a file is analyzed on the client machine using emulation or proactive defense technologies, and the conclusion is that the file is malicious. The file can then be uploaded for further investigation by the AV company’s analysts. Of course, this means the customer has to share the file itself, which s/he may not be willing to do. There is another option, however: instead of sending a binary file, the client software could simply send the fingerprint of the file together with details (such as file size, threat classification etc.) from the modules which performed the analysis. If a new worm is spreading fast, the analysts at the AV company will see a new file that was flagged as suspicious and suddenly appeared on thousands of computers. It’s then up to the analysts to determine whether this is a new malicious file. If they conclude that there’s a genuine threat, adding detection is easy: the file’s fingerprint already exists and simply has to be moved into the detection database that client computers query.
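The server side of this two-way scheme can be sketched as an aggregator that counts how many distinct machines report the same suspicious fingerprint and queues widespread ones for an analyst. The report fields follow the details mentioned above (fingerprint, file size, classification); the machine identifier, the threshold and all names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Report:
    fingerprint: str     # checksum of the file, not the file itself
    machine_id: str      # hypothetical anonymous client identifier
    file_size: int
    classification: str  # verdict from the client's emulator or proactive defense


class ReportAggregator:
    def __init__(self, alert_threshold: int = 1000) -> None:
        self.alert_threshold = alert_threshold
        self._machines: Dict[str, Set[str]] = defaultdict(set)
        self.detections: Set[str] = set()  # fingerprints clients are told are malicious

    def submit(self, report: Report) -> None:
        # Count distinct machines, so one client re-reporting doesn't inflate the spread.
        self._machines[report.fingerprint].add(report.machine_id)

    def review_queue(self) -> List[str]:
        """Widespread, not-yet-detected fingerprints, most widespread first."""
        pending = [
            fp for fp, machines in self._machines.items()
            if len(machines) >= self.alert_threshold and fp not in self.detections
        ]
        return sorted(pending, key=lambda fp: len(self._machines[fp]), reverse=True)

    def confirm_malicious(self, fingerprint: str) -> None:
        """Called once an analyst has confirmed the threat."""
        self.detections.add(fingerprint)
```

Calling `confirm_malicious()` corresponds to moving the already-known fingerprint into the detection database, so no new signature has to be written or shipped.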

There’s no such thing as a free lunch

Despite all the advantages of in-the-cloud security, there are also some drawbacks. The example above shows how adding detection on the basis of statistical monitoring can be an effective way of combating sudden outbreaks. However, it dramatically increases the risk of false positives. Suppose a new version of a popular shareware program is released; news will spread quickly, and very soon lots of people will be downloading the software. If the program modifies system files, isn’t signed, and perhaps downloads other executables in order to update itself, it’s likely to be flagged as malicious by an automated in-the-cloud system. A few seconds later, this would result in thousands of false positives around the world. Of course, this wouldn’t happen if a human analyst had looked at the program, but that would take time, negating the benefit of rapid detection. Although it would be possible to fix the false positive in a matter of seconds (in contrast to a false positive in a classic signature database, which remains in place until the next update is downloaded), there would still be negative consequences. People don’t like false positives, and if an individual notices that in-the-cloud security leads to more false alarms, s/he is likely to disable the feature or move to another AV company which still employs the classic approach. To prevent this, AV companies need to set up and maintain their own collections of files which are known to be clean. If a new patch or program is released, the AV company has to analyze and allowlist it very quickly, before customers start downloading it.
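One way to express this safeguard is to consult the clean-file collection before any automated, statistics-based detection fires. The sketch below is hypothetical: the thresholds, the heuristic score and the three possible outcomes are illustrative assumptions, not a description of any vendor’s actual rules.

```python
from typing import AbstractSet


def automated_verdict(
    fingerprint: str,
    distinct_machines: int,
    heuristic_score: float,
    allowlist: AbstractSet[str],
    spread_threshold: int = 1000,
    score_threshold: float = 0.8,
) -> str:
    """Decide what an automated in-the-cloud system should do with a fingerprint."""
    # Known-clean files (new releases of popular programs, patches) are never
    # auto-detected, however fast they spread.
    if fingerprint in allowlist:
        return "clean"
    # Sudden spread plus suspicious behavior: detect immediately.
    if distinct_machines >= spread_threshold and heuristic_score >= score_threshold:
        return "detect"
    # Everything else waits for a human analyst or more data.
    return "undecided"
```

Without its allowlist entry, the popular shareware release in the example above would meet both thresholds and be detected within seconds, which is exactly the false-positive scenario the clean-file collection is meant to prevent.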

Moving into the cloud therefore means a lot of additional work for AV vendors. Apart from actively maintaining the collection of clean files, the company’s servers have to be absolutely stable 24 hours a day. Of course, customers have always expected this, as offline servers can’t deliver updates. Nonetheless, the classic approach still works with signatures that are several hours old, albeit with lower detection rates. The cloud approach differs here: the cloud component offers no protection at all during server downtime, as the whole concept is based on on-demand, real-time communication. In the case of server downtime, heuristics would have to be used in combination with powerful HIPS technology to ensure that customers remain protected.
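On the client, this fallback might look like the following sketch: try the cloud lookup first and, if the server cannot be reached, rely on local heuristics instead. Both callables are hypothetical placeholders for a real network query and a real heuristic engine, and SHA-256 is again assumed as the fingerprint.

```python
import hashlib
from typing import Callable


def verdict_with_fallback(
    data: bytes,
    cloud_lookup: Callable[[str], bool],
    local_heuristics: Callable[[bytes], bool],
) -> str:
    """Prefer the in-the-cloud verdict; fall back to local analysis when offline."""
    fingerprint = hashlib.sha256(data).hexdigest()
    try:
        is_malicious = cloud_lookup(fingerprint)
    except (ConnectionError, TimeoutError):
        # Server downtime: the cloud gives no answer, so local heuristics
        # (and, in a real product, HIPS) have to carry the load.
        if local_heuristics(data):
            return "suspicious (local heuristics)"
        return "unknown (cloud unavailable)"
    return "malicious" if is_malicious else "clean"
```

The cloud verdict takes priority whenever it is available. Local heuristics are consulted only when the lookup fails, which mirrors the division of labor described above.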

What does the future hold?

Kaspersky Lab was among the pioneers of in-the-cloud security with the release of KIS 2009 and the rollout of the associated Kaspersky Security Network. Many security companies have now started to implement the in-the-cloud approach in their products, but it should be stressed that the industry overall is still at the initial stages of harnessing the full power of this technology. The situation is comparable to that in the car industry: although electric cars will, in the long run, come to replace petrol and diesel cars, at the moment most of the cars advertised as electric are actually hybrids. The IT world is usually quicker to innovate than other industries, but it is nevertheless likely to be two or three years before in-the-cloud security fully replaces the signature-based detection methods used today.

Conclusion

By now, the difference between cloud computing and in-the-cloud security should be clear. It will be a couple of years before cloud computing really takes off, as companies will have to get used to the idea of sharing all of their data with service providers.

AV products which implement in-the-cloud technology have already been released, and there seems little doubt that by the end of the year this technology will be widely accepted. As time goes on, the two approaches will merge, with individuals and organizations using cloud computers protected by in-the-cloud security services. Once this happens, the Internet will be as essential to daily activity as electricity is today.

Clear skies ahead: cloud computing and in-the-cloud security