Unlike conventional World Wide Web technologies, the Tor Darknet onion routing technologies give users a real chance to remain anonymous. Many users have jumped at this chance – some did so to protect themselves or out of curiosity, while others developed a false sense of impunity, and saw an opportunity to do clandestine business anonymously: selling banned goods, distributing illegal content, etc. However, further developments, such as the detention of the maker of the Silk Road site, have conclusively demonstrated that these businesses were less anonymous than most assumed.

Intelligence services have not disclosed any technical details of how they detained cybercriminals who created Tor sites to distribute illegal goods; in particular, they have given no clues as to how they identify cybercriminals who act anonymously. This may mean that the implementation of the Tor Darknet contains some vulnerabilities and/or configuration defects that make it possible to unmask any Tor user. In this research, we present practical examples to demonstrate how Tor users may lose their anonymity, and draw conclusions from those examples.

How are Tor users pinned down?

The history of the Tor Darknet has seen many attempts – theoretical and practical – to identify anonymous users. All of them can be loosely divided into two groups: attacks on the client side (the browser), and attacks on the connection.

Problems in Web Browsers

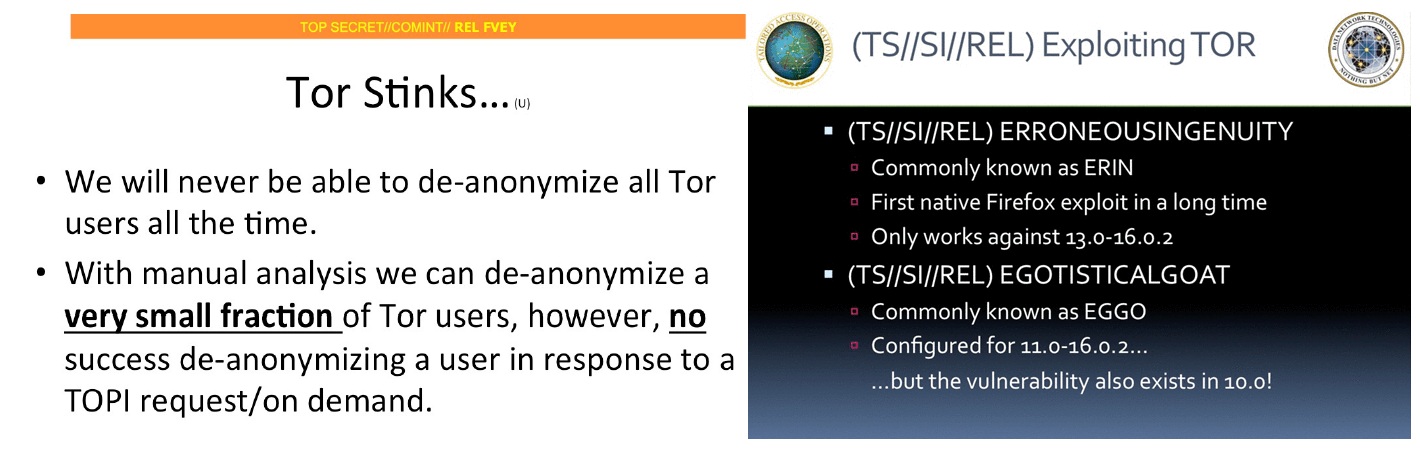

The leaked NSA documents tell us that intelligence services have no qualms about using exploits for Firefox, on which Tor Browser is based. However, as the NSA reports in its presentation, vulnerability exploitation tools do not allow permanent surveillance of Darknet users. Exploits have a very short life cycle, so at any given moment there are different versions of the browser in use, some containing a specific vulnerability and others not. This enables surveillance of only a very narrow spectrum of users.

The leaked NSA documents, including a review of how Tor users can be de-anonymized (Source: www.theguardian.com)

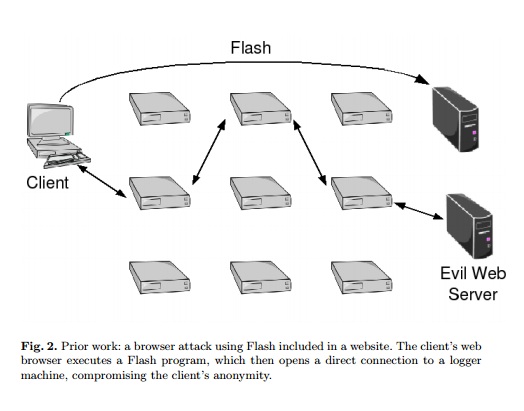

As well as these pseudo-official documents, the Tor community is also aware of other, more interesting and ingenious attacks on the client side. For instance, researchers from the Massachusetts Institute of Technology established that Flash creates a dedicated communication channel to the attacker’s special server, which captures the client’s real IP address and thus completely exposes the victim. However, Tor Browser’s developers reacted promptly to this problem by excluding Flash content handlers from their product.

Flash as a way to find out the victim’s real IP address (Source: http://web.mit.edu)

Another, more recent method of compromising a web browser is implemented using the WebRTC DLL. This DLL is designed to set up a video stream transmission channel supporting HTML5 and, like the Flash channel described above, it used to allow the victim’s real IP address to be established. WebRTC’s so-called STUN requests are sent in plain text, thus bypassing Tor, with all the ensuing consequences. However, this “shortcoming” was also promptly rectified by Tor Browser developers, so the browser now blocks WebRTC by default.

Attacks on the communication channel

Unlike browser attacks, attacks on the channel between the Tor client and a server located within or outside of the Darknet seem unconvincing. So far, most of the concepts have been presented by researchers in laboratory conditions, and no ‘in-the-field’ proofs of concept have yet been presented.

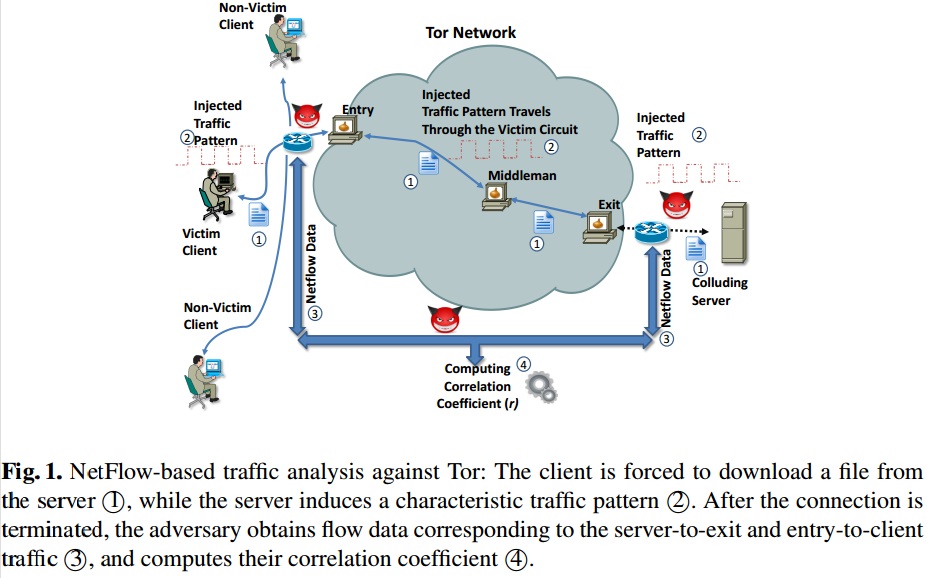

Among these theoretical works, one fundamental text deserves a special mention: it is based on analyzing traffic using the NetFlow protocol. The authors of the research believe that an attacker is capable of analyzing NetFlow records on routers that are direct Tor nodes or are located near them. A NetFlow record contains the following information:

- Protocol version number;

- Record number;

- Inbound and outgoing network interface;

- Time of stream head and stream end;

- Number of bytes and packets in the stream;

- Address of source and destination;

- Port of source and destination;

- IP protocol number;

- The value of Type of Service;

- All flags observed during TCP connections;

- Gateway address;

- Masks of source and destination subnets.

In practical terms all of these identify the client.

De-anonymizing a Tor client based on traffic analysis

(Source: https://mice.cs.columbia.edu)
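To make the idea of flow-based traffic analysis more concrete, here is a minimal sketch. It assumes the attacker has already reduced flow records to per-interval byte counts and simply looks for the client-side flow whose traffic pattern correlates best with a flow observed near the server. The record shape, the function names and the sample data are all made up for illustration; this is one common way of comparing traffic patterns, not necessarily the method used in the research cited above.

```javascript
// Hypothetical, simplified flow records: a source address plus byte counts
// per time interval. These names are illustrative assumptions and are not
// part of any NetFlow library.

/** Pearson correlation coefficient of two equal-length numeric series. */
function pearson(xs, ys) {
  if (xs.length !== ys.length || xs.length < 2) {
    throw new Error("series must have equal length of at least 2");
  }
  const n = xs.length;
  const meanX = xs.reduce((a, b) => a + b, 0) / n;
  const meanY = ys.reduce((a, b) => a + b, 0) / n;
  let cov = 0;
  let varX = 0;
  let varY = 0;
  for (let i = 0; i < n; i++) {
    const dx = xs[i] - meanX;
    const dy = ys[i] - meanY;
    cov += dx * dy;
    varX += dx * dx;
    varY += dy * dy;
  }
  if (varX === 0 || varY === 0) return 0; // a flat series carries no signal
  return cov / Math.sqrt(varX * varY);
}

/**
 * Given the byte-count series of one flow seen near the server and a list of
 * candidate flows seen near clients, return the best-correlated candidate.
 */
function bestMatch(serverSeries, candidates) {
  let best = null;
  for (const c of candidates) {
    const score = pearson(serverSeries, c.bytesPerInterval);
    if (best === null || score > best.score) {
      best = { srcAddr: c.srcAddr, score };
    }
  }
  return best;
}

// Made-up sample data using documentation address ranges.
const serverSide = [1200, 300, 4500, 800, 2500];
const clientSide = [
  { srcAddr: "192.0.2.10", bytesPerInterval: [900, 950, 1000, 980, 940] },
  { srcAddr: "198.51.100.7", bytesPerInterval: [1250, 340, 4600, 850, 2580] },
];
console.log(bestMatch(serverSide, clientSide));
```

In this toy data set the second candidate follows the server-side pattern almost exactly, so it is the one returned. Real traffic is far noisier, which is part of why such attacks need many observation points.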

This kind of traffic analysis-based investigation requires a huge number of points of presence within Tor, if the attacker wants to be able to de-anonymize any user at any period of time. For this reason, these studies are of no practical interest to individual researchers unless they have a huge pool of computing resources. Also for this reason, we will take a different tack, and consider more practical methods of analyzing a Tor user’s activity.

Passive monitoring system

All residents of the network can share their computing resources to set up a Node server. A Node server is a nodal element in the Tor network that serves as an intermediary in a network client’s information traffic. Because exit nodes are an end link in traffic decryption operations, they may become a source that can leak interesting information.

This opens up the possibility of collecting assorted information from the decrypted traffic. For instance, the addresses of onion resources can be extracted from HTTP headers. This makes it possible to bypass internal search engines and/or website catalogs, which may include irrelevant information about inactive onion sites.
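As a rough illustration of the header extraction described above, the sketch below pulls .onion host names out of the Host and Referer lines of a raw HTTP request. The function name, the choice of headers and the sample request are assumptions made for this example; the pattern accepts 16-character (older) and 56-character (current) base32 onion names.

```javascript
// Onion names are base32 (a-z, 2-7): 16 characters for the older format,
// 56 characters for the current one.
const ONION_RE = /\b([a-z2-7]{56}|[a-z2-7]{16})\.onion\b/gi;

/**
 * Extract unique .onion host names from the Host and Referer headers of a
 * raw HTTP request. Other headers are ignored in this sketch.
 */
function extractOnionHosts(rawHeaders) {
  const found = new Set();
  for (const line of rawHeaders.split(/\r?\n/)) {
    if (!/^(host|referer):/i.test(line)) continue;
    for (const match of line.matchAll(ONION_RE)) {
      found.add(match[0].toLowerCase());
    }
  }
  return [...found].sort();
}

// Made-up request: the onion name below is not a real service.
const sampleRequest = [
  "GET /index.html HTTP/1.1",
  "Host: www.example.com",
  "Referer: http://exampleonion2345.onion/links.html",
  "User-Agent: Mozilla/5.0",
].join("\r\n");

console.log(extractOnionHosts(sampleRequest));
```

Collecting names this way only reveals onion sites that users actually visited, which is the advantage over catalogs that list inactive sites.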

However, this passive monitoring does not enable us to de-anonymize a user in the full sense of the word, because the researcher can only analyze those data network packets that the users make available ‘of their own will’.

Active monitoring system

To find out more about a Darknet denizen we need to provoke them into giving away some data about their environment. In other words, we need an active data collection system.

An expert at Leviathan Security discovered a multitude of exit nodes and presented a vivid example of an active monitoring system at work in the field. These nodes differed from other exit nodes in that they injected malicious code into the binary files passing through them. While the client downloaded a file from the Internet, using Tor to preserve anonymity, the malicious exit node conducted a MITM attack and planted malicious code in the binary file being downloaded.

This incident is a good illustration of the concept of an active monitoring system; however, it is also a good illustration of its flipside: any activity at an exit node (such as traffic manipulation) is quickly and easily identified by automatic tools, and the node is promptly denylisted by the Tor community.

Let’s start over with a clean slate

HTML5 has brought us not only WebRTC, but also the interesting tag ‘canvas’, which is designed to create bitmap images with the help of JavaScript. This tag has a peculiarity in how it renders images: each web-browser renders images differently depending on various factors, such as:

- Various graphics drivers and hardware components installed on the client’s side;

- Various sets of software in the operating system and various configurations of the software environment.

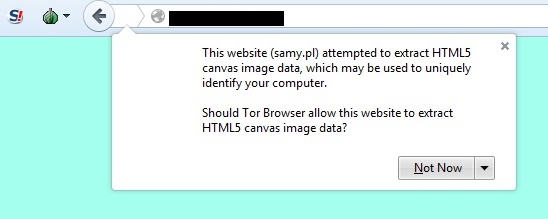

The parameters of rendered images can uniquely identify a web browser and its software and hardware environment. Based on this peculiarity, a so-called fingerprint can be created. This technique is not new – it is used, for instance, by some online advertising agencies to track users’ interests. However, not all of its methods can be implemented in Tor Browser. For example, supercookies cannot be used in Tor Browser, Flash and Java are disabled by default, and font use is restricted. Some other methods display notifications that may alert the user.

Thus, our first attempts at canvas fingerprinting with the help of the getImageData() function, which extracts image data, were blocked by Tor Browser:

However, some loopholes are still open at this moment, with which fingerprinting in Tor can be done without inducing notifications.

By their fonts we shall know them

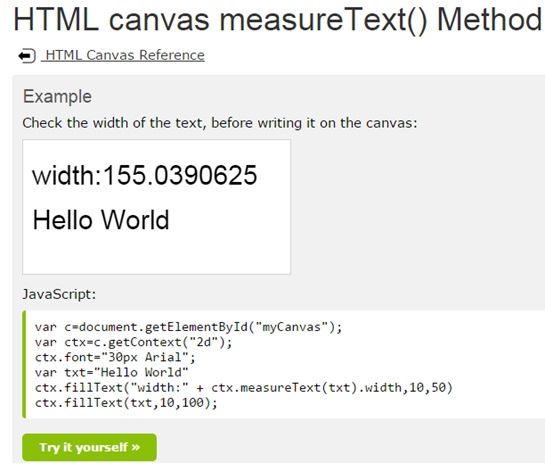

Tor Browser can be identified with the help of the measureText() function, which measures the width of a text rendered in canvas:

Using measureText() to measure a font size that is unique to the operating system

If the resulting font width has a unique value (it is sometimes a floating point value), then we can identify the browser, including Tor Browser. We acknowledge that in some cases the resulting font width values may be the same for different users.

It should be noted that this is not the only function that can acquire unique values. Another such function is getBoundingClientRect(), which can acquire the height and the width of the text border rectangle.
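The two measurements described above can be sketched roughly as follows. This is not the script used in the research; the function names, the probe string, the 72px size and the font list are assumptions chosen for illustration. The first function takes any object that behaves like a canvas 2D context, so it can be tried outside a browser with a stand-in object.

```javascript
/**
 * Measure the rendered width of a probe string in several fonts.
 * `ctx` must behave like a CanvasRenderingContext2D: it needs a writable
 * `font` property and a measureText() method returning { width }.
 */
function measureFontWidths(ctx, fonts, probe = "mmmmmmmmmmlli") {
  const widths = {};
  for (const font of fonts) {
    ctx.font = `72px ${font}`;
    widths[font] = ctx.measureText(probe).width;
  }
  return widths;
}

/**
 * The same idea with getBoundingClientRect(): render the probe in a hidden
 * element and read back the size of its bounding box. Needs a real DOM.
 */
function measureTextBox(doc, font, probe = "mmmmmmmmmmlli") {
  const span = doc.createElement("span");
  span.style.font = `72px ${font}`;
  span.style.position = "absolute";
  span.style.visibility = "hidden";
  span.textContent = probe;
  doc.body.appendChild(span);
  const rect = span.getBoundingClientRect();
  doc.body.removeChild(span);
  return { width: rect.width, height: rect.height };
}

// Only run the measurements where a DOM is available (i.e. in a browser).
if (typeof document !== "undefined") {
  const ctx = document.createElement("canvas").getContext("2d");
  console.log(measureFontWidths(ctx, ["serif", "sans-serif", "monospace"]));
  console.log(measureTextBox(document, "serif"));
}
```

Because the returned widths can be fractional and depend on the fonts and rendering stack of the machine, the set of values taken together is what may act as an identifier.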

When the problem of fingerprinting users became known to the community (it is also relevant to Tor Browser users), a corresponding request was filed. However, Tor Browser developers are in no haste to patch this drawback in the configuration, stating that denylisting such functions is ineffective.

Tor developer’s official reply to the font rendering problem

Field trials

This approach was applied by a researcher nicknamed “KOLANICH”. Using both functions, measureText() and getBoundingClientRect(), he wrote a script, tested it locally in different browsers and obtained unique identifiers.

Using the same methodology, we arranged a test bed, aiming at fingerprinting Tor Browser in various software and hardware environments.

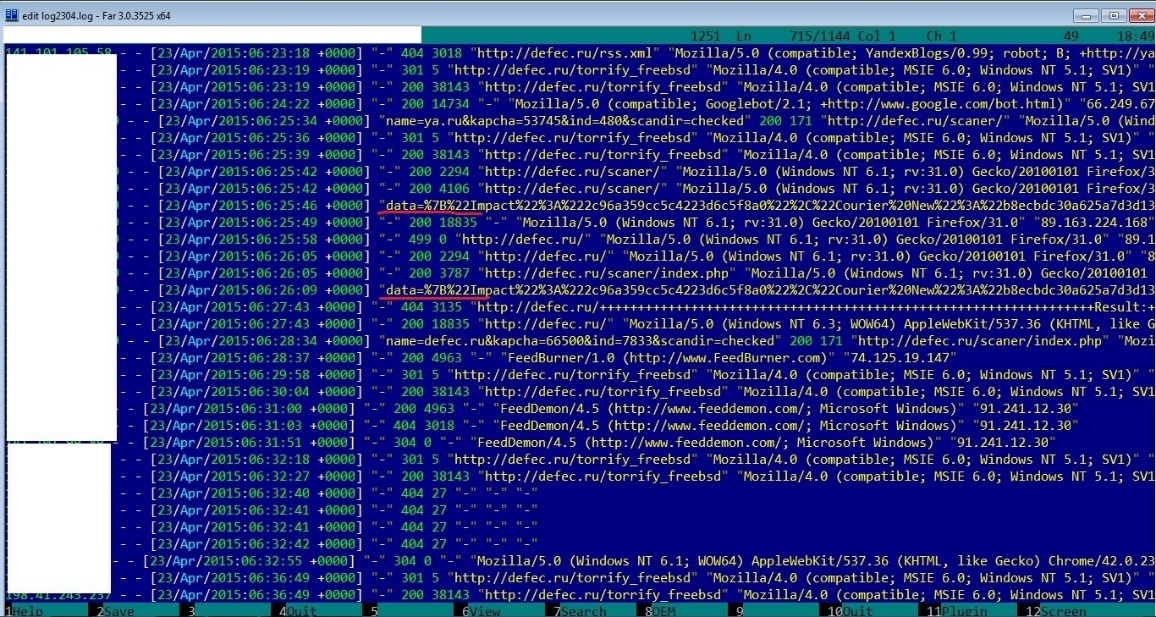

To address this problem, we used one author’s blog and embedded JavaScript code that uses the measureText() and getBoundingClientRect() functions to measure fonts rendered in the web browser of each user visiting the web page. The script sends the measured values in a POST request to the web server, which in turn saves the request in its logs.

A fragment of a web-server’s log with a visible Tor Browser fingerprint

At this time, we are still collecting the results produced by this script. To date, all the returned values are unique. We will publish a report about the results in due course.
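The collection step described above (measure, then POST the values to the server) could look roughly like the sketch below. It is an assumption-laden illustration, not the script used on the blog: the `/collect` endpoint, the JSON payload and the use of a 32-bit FNV-1a hash to condense the measurements into a short identifier are all choices made for this example, and the resulting 8-character ID does not match the format of the fingerprints shown in this article.

```javascript
/**
 * 32-bit FNV-1a hash over the UTF-16 code units of a string, returned as
 * 8 lowercase hex characters. Chosen only because it is tiny and
 * dependency-free; it is not collision-resistant.
 */
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by the FNV prime
  }
  return hash.toString(16).padStart(8, "0");
}

/** Condense a { name: value } map of measurements into a short identifier. */
function fingerprintOf(measurements) {
  // Sort keys so that the same measurements always serialize identically.
  const canonical = Object.keys(measurements)
    .sort()
    .map((key) => `${key}=${measurements[key]}`)
    .join(";");
  return fnv1a32(canonical);
}

/**
 * Send the identifier and raw values to the server in a POST request.
 * `fetchImpl` defaults to the global fetch and can be replaced for testing.
 */
function reportFingerprint(url, measurements, fetchImpl = fetch) {
  const body = JSON.stringify({ id: fingerprintOf(measurements), measurements });
  return fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body,
  });
}

// Made-up measurement values; "/collect" is a hypothetical endpoint.
const sampleMeasurements = { serif: 412.3125, "sans-serif": 398.5, monospace: 561.6 };
console.log(fingerprintOf(sampleMeasurements));
if (typeof document !== "undefined") {
  reportFingerprint("/collect", sampleMeasurements).catch(console.error);
}
```

On the server side nothing special is required: any web server that logs POST bodies, or a small handler that appends them to a file, is enough to build up the collection.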

Possible practical implications

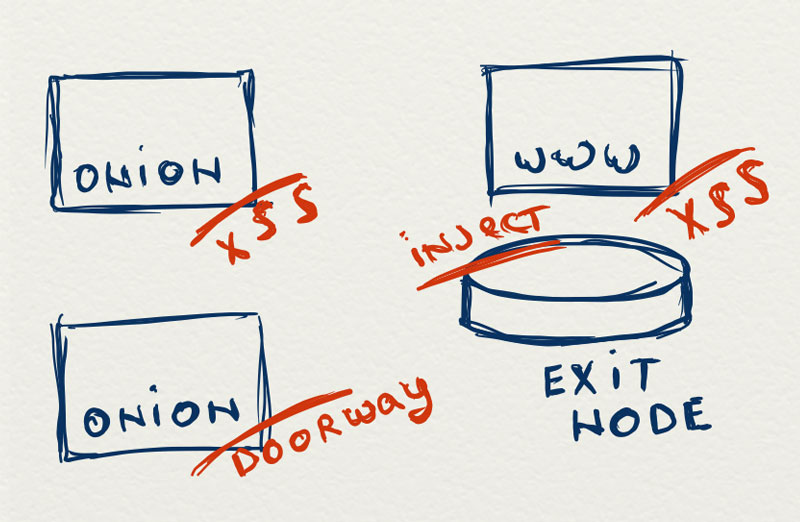

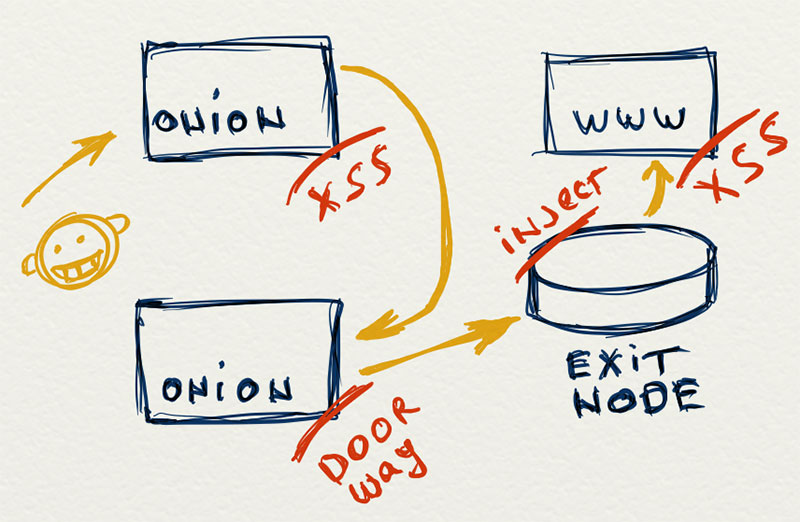

How can this concept be used in real world conditions to identify Tor Browser users? The JavaScript code described above can be installed on several objects that participate in data traffic in the Darknet:

- Exit node. A MITM-attack is implemented, during which JavaScript code is injected into all web pages that a Darknet resident visits in the outside Web.

- Internal onion resources and external web sites controlled by the attackers. For example, an attacker launches a ‘doorway’, or a web page specially crafted with a specific audience in view, and fingerprints all visitors.

- Internal and external websites that are vulnerable to cross-site scripting (XSS), preferably stored XSS, although this is not essential.

Objects that could fingerprint a Tor user

The last item is especially interesting. We have scanned about 100 onion resources for web vulnerabilities (these resources were in the logs of the passive monitoring system) and filtered out ‘false positives’. Thus, we have discovered that about 30% of analyzed Darknet resources are vulnerable to cross-site scripting attacks.

All this means that arranging a farm of exit nodes is not the only method an attacker can use to de-anonymize a user. The attacker can also compromise websites and set up doorways, place JavaScript code there and collect a database of unique fingerprints.

The process of de-anonymizing a Tor user

So attackers are not restricted to injecting JavaScript code into legitimate websites. There are more objects into which JavaScript code can be injected, which expands the number of possible points of presence, including those within the Darknet.

Following this approach, the attacker could, in theory, find out, for instance, which topics interest the user with the unique fingerprint ‘c2c91d5b3c4fecd9109afe0e’, and on which sites that user logs in. As a result, the attacker knows the user’s profile on a web resource, and the user’s surfing history.
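The profiling step can be pictured as a simple grouping of collected records by fingerprint. The sketch below assumes a hypothetical record format with `fingerprint`, `site` and an optional `loggedInAs` field; the site names and account names are invented, and only the fingerprint value is taken from the text above.

```javascript
/**
 * Group collected records by fingerprint. Each profile holds the set of
 * sites where the fingerprint was seen and the accounts it was seen using.
 */
function buildProfiles(records) {
  const profiles = new Map();
  for (const { fingerprint, site, loggedInAs } of records) {
    if (!profiles.has(fingerprint)) {
      profiles.set(fingerprint, { sites: new Set(), accounts: new Set() });
    }
    const profile = profiles.get(fingerprint);
    profile.sites.add(site);
    if (loggedInAs) profile.accounts.add(`${loggedInAs}@${site}`);
  }
  return profiles;
}

// Invented records from three points of presence.
const collected = [
  { fingerprint: "c2c91d5b3c4fecd9109afe0e", site: "forum.example" },
  { fingerprint: "c2c91d5b3c4fecd9109afe0e", site: "market.example", loggedInAs: "user123" },
  { fingerprint: "0000aaaa1111bbbb2222cccc", site: "forum.example" },
  { fingerprint: "c2c91d5b3c4fecd9109afe0e", site: "forum.example", loggedInAs: "anon42" },
];

const target = buildProfiles(collected).get("c2c91d5b3c4fecd9109afe0e");
console.log([...target.sites], [...target.accounts]);
```

The more points of presence feed records into such a table, the more complete each profile becomes, which is why the number of injectable sites matters.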

In place of a conclusion

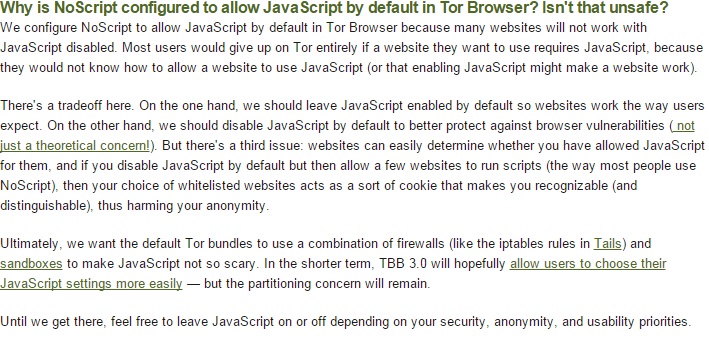

On the Tor Project’s official website, the developers posted an answer to the question “why is JavaScript allowed by Tor Browser?”:

Tor Browser’s official answer to a question about JavaScript

It appears from this answer that we should not expect the developers to disable JavaScript code in Tor Browser.

The Tor exit node research is purely theoretical and we haven’t set up any live servers during the research; the tests were done in a virtual Darknet disconnected from the Internet.

Uncovering Tor users: where anonymity ends in the Darknet

Jeremy Quadri

To think the NSA has been exploiting the Darknet. I feel for the millions of people fooled into thinking that the Darknet would completely shield their identity.

rj

The Navy built Tor. What were your expectations for privacy?

kye rosendale

TOR was originally designed so that each user, would act as a relay . and thereby slowly decrypt the traffic on its way to the client. This means that injecting the poisoned code can only take place at one of these “relays” . It cannot be injected at the source as that is where the encryption is at its strongest.