One of the most complex yet effective methods of gaining unauthorized access to corporate network resources is an attack using forged certificates. Attackers create such certificates to trick the Key Distribution Center (KDC) into granting access to the target company’s network. One example is the Shadow Credentials technique, which lets an attacker sign in under a user account by modifying the victim’s msDS-KeyCredentialLink attribute and adding their own public key (key credential) to it. Such attacks are difficult to detect because, instead of stealing credentials, the cybercriminals abuse legitimate Active Directory (AD) mechanisms and configuration flaws.

Nevertheless, it is possible (and necessary) to counter attacks that use forged certificates. Drawing on the practical experience of our MDR service, I identified several signs of such attacks inside the network and developed a Proof-of-Concept utility that finds artifacts in AD, as well as several detection rules that can be added to a SIEM. But first, a few words about the quirks of certificate-based Kerberos authentication.

Kerberos authentication in AD and implementation quirks

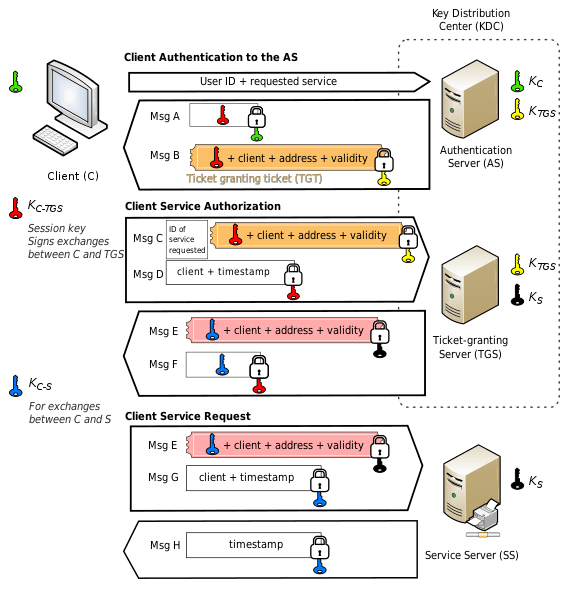

In modern corporate networks based on Active Directory, access to resources is authenticated using the Kerberos protocol. Users can access a service (object) inside the network only if they can present this object with a ticket issued by the KDC (Msg E in the figure below). The KDC component that issues service tickets is called the Ticket Granting Server (TGS). Moreover, the user receives a TGS ticket from the KDC only if they have a Ticket Granting Ticket (TGT) (Msg B in the figure below). Essentially, a TGT is proof of successful user authentication, usually by password.

Kerberos authentication scheme. Source: https://en.wikipedia.org/wiki/Kerberos_(protocol)

However, there is a way to get a TGT without knowing the password: using a certificate. For this to work, the KDC must trust the provided certificate, and the certificate must be mapped to the principal for which the TGT is requested. This part of Kerberos, called Public Key Cryptography for Initial Authentication (PKINIT), makes authentication quite easy to set up if the corporate network has a Certificate Authority (CA) that issues certificates to domain users. But there is an alternative way.

For example, to take advantage of Windows Hello for Business features, such as PIN-based sign-in or face recognition, the device you’re signing in from must have its own certificate in AD so that the KDC can issue a TGT based on it. However, not every Active Directory network has a Certificate Authority. This is why the msDS-KeyCredentialLink attribute was introduced: the certificate (more precisely, its public key) can be written to it, and the KDC will trust it and issue a TGT. It’s a genuinely useful solution that extends the capabilities of Microsoft Active Directory.

However, by the same logic, any subject that can write the msDS-KeyCredentialLink attribute of an object will also be able to get a TGT for that object. Therein lies the problem.

How the attack unfolds

Let’s illustrate one of the possible attack scenarios:

- The subject logan_howard, who has write permissions for any attribute in the AD domain, uses the Whisker utility to write a public key to the msDS-KeyCredentialLink attribute of a domain controller object (ad-gam$).

- Using the Rubeus toolkit, the subject obtains a TGT issued to the domain controller.

- On presenting this TGT, the subject gets a TGS ticket to synchronize password information in the domain (MS-DRSR: Directory Replication Service (DRS) Remote Protocol).

- Acting as the domain controller, the attacker “replicates” the password hash of the domain administrator account (Administrator) and uses it to impersonate the administrator, access data and move laterally inside the corporate network. This attack is called DCSync; here it is carried out with mimikatz.

Where to look for artifacts

Let’s focus not on how to get the KDC to trust a particular certificate, including stolen or forged ones, but on what happens when the TGT is issued. This triggers Event 4768 on the domain controller: A Kerberos authentication ticket (TGT) was requested. This event may contain artifacts of the certificate used for authentication in three fields: CertIssuerName, CertSerialNumber and CertThumbprint. These are the fields we’ll examine.
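As a minimal sketch of this first step, the Python function below pulls the three certificate fields out of a 4768 event and returns None when the ticket was not requested with a certificate. It assumes the event data arrives as a flat dictionary keyed by the field names above (as in Winlogbeat’s winlog.event_data), and that an empty value or a lone “-” means the field is unset; check what your own pipeline emits. CertInfo and extract_cert_info are hypothetical names.

```python
from typing import Mapping, NamedTuple, Optional

# Certificate fields of Event 4768, named as in this article.
CERT_FIELDS = ("CertIssuerName", "CertSerialNumber", "CertThumbprint")


class CertInfo(NamedTuple):
    issuer: str
    serial: str
    thumbprint: str


def extract_cert_info(event_data: Mapping[str, str]) -> Optional[CertInfo]:
    """Return the certificate artifacts of a 4768 event, or None when the TGT
    was requested without a certificate (all three fields empty)."""
    values = []
    for field in CERT_FIELDS:
        value = (event_data.get(field) or "").strip()
        # Assumption: an empty string or a lone "-" means "not set".
        values.append("" if value == "-" else value)
    if not any(values):
        return None
    return CertInfo(*values)
```

Only events for which this returns a value are relevant to the rest of the analysis.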

What tools to use

For simplicity and convenience, we’ll handle all events in the Kibana interface of an ELK cluster. By default, Logstash can convert the ticket options bit field of Event 4768 into an array with the names of the flags set in the ticket request, which makes searching much faster and smoother. To get your ELK lab up and running quickly, I recommend a handy set of Docker configurations, along with the official Winlogbeat setup guide.
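The pipeline performs this translation for you, but it helps to know what it amounts to. Below is a minimal Python sketch, assuming the raw TicketOptions value is available as an integer. The bit positions come from RFC 4120, plus the canonicalize option (bit 15) from RFC 6806, labeled “name-canonicalize” to match this article; KDC_OPTIONS and decode_ticket_options are hypothetical helpers, not part of any Elastic API. Note that Kerberos numbers bits from the most significant end, so bit n corresponds to the mask 1 << (31 - n).

```python
# KDC option bit positions (RFC 4120, plus canonicalize from RFC 6806).
# Bit 0 is the most significant bit of the 32-bit field.
KDC_OPTIONS = {
    1: "forwardable",
    2: "forwarded",
    3: "proxiable",
    4: "proxy",
    5: "allow-postdate",
    6: "postdated",
    8: "renewable",
    11: "opt-hardware-auth",
    15: "name-canonicalize",
    26: "disable-transited-check",
    27: "renewable-ok",
    28: "enc-tkt-in-skey",
    30: "renew",
    31: "validate",
}


def decode_ticket_options(options: int) -> list[str]:
    """Translate a TicketOptions value such as 0x40810010 into flag names."""
    names = []
    for bit, name in sorted(KDC_OPTIONS.items()):
        # Convert Kerberos MSB-first bit numbering into a Python bit mask.
        if options & (1 << (31 - bit)):
            names.append(name)
    return names


print(decode_ticket_options(0x40810010))
# ['forwardable', 'renewable', 'name-canonicalize', 'renewable-ok']
```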

What these events tell us

On the test bench, we generated several TGT request events using a forged certificate created with Whisker. Here’s what these events look like in the test environment:

As part of our MDR service, we observe several hundred thousand certificate-based ticket request events a week. This fairly broad sample allows us to identify some patterns:

- A significant portion of the events consists of certificate-based ticket requests for Microsoft Azure Active Directory (the “Azure” line in the aggregation in the screenshot below). These events are of no interest to us and can easily be filtered out in Kibana by applying a regular expression to the CertIssuerName field:

```
CertIssuerName.raw:/S\-1\-(5\-21|12\-1)\-([0-9]{8,10}\-){3}[0-9]{4,10}\/[a-f0-9]{8}\-([a-f0-9]{4}\-){3}[a-f0-9]{12}\/login\.windows\.net\/[a-f0-9]{8}\-([a-f0-9]{4}\-){3}[a-f0-9]{12}.*/
```

- There are also many events for certificates used by Windows Hello for Business (the “Hello4B self gen” line). In this case, the certificate data is written to the msDS-KeyCredentialLink attribute, and the key is generated in software (NCRYPT_IMPL_SOFTWARE_FLAG). Such certificates typically have an issuer name beginning with “CN=” and a two-digit serial number, usually 01:

```
CertIssuerName.raw:CN=* AND CertSerialNumber.raw:01
```

- If the computer has a key stored in a Trusted Platform Module (the “TPM enrolled” line), the certificate that uses this key can likewise be described by regular expressions, so it is of no interest to us either:

```
CertSerialNumber.raw:/[a-f0-9]{32}/ AND CertThumbprint.raw:/[a-f0-9]{40}/
```

- But perhaps the most common case is certificates issued by a Microsoft Certificate Authority (the “Windows server CS role issued” line). This service can be enabled on a computer running a server version of Microsoft Windows. If you monitor your own infrastructure rather than working as an MSSP, you’ll find it far easier to filter out this case by the CertIssuerName value, that is, the name of your CA server (most likely the only one in each domain of the forest). Indeed, even large corporate networks have a fairly small number of CAs able to issue certificates. And even if you are an MSSP, it won’t be much trouble to find out the names of all client PKI servers in order to filter them out. Now for some patterns in other fields:

```
CertSerialNumber.raw:/[a-f0-9]{38}/ AND CertThumbprint.raw:/[a-f0-9]{40}/
```

- There may also be third-party PKI implementations whose certificates the Kerberos servers trust when issuing tickets. For example, our monitoring came across specialized software developed by Lanaco (no more than ten requests in 30 days). Such requests can be filtered out as well.
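These filters can be combined into a single classifier, which is roughly what the aggregation below does. The Python sketch reuses the Kibana regular expressions with re.fullmatch, because Lucene regular expressions must match the whole term. The category labels mirror the aggregation lines; the known_pki_issuers parameter, standing in for the names of your CA servers and any trusted third-party PKI, is a hypothetical addition. Serial numbers and thumbprints are lowercased first, on the assumption that your pipeline might store them in uppercase.

```python
import re
from typing import Collection

# Patterns copied from the Kibana queries above. Lucene regular expressions
# must match the whole term, so re.fullmatch reproduces that behavior.
GUID = r"[a-f0-9]{8}\-([a-f0-9]{4}\-){3}[a-f0-9]{12}"
AZURE_ISSUER = re.compile(
    r"S\-1\-(5\-21|12\-1)\-([0-9]{8,10}\-){3}[0-9]{4,10}\/"
    + GUID + r"\/login\.windows\.net\/" + GUID + r".*"
)
HEX32 = re.compile(r"[a-f0-9]{32}")
HEX38 = re.compile(r"[a-f0-9]{38}")
HEX40 = re.compile(r"[a-f0-9]{40}")


def classify_cert(issuer: str, serial: str, thumbprint: str,
                  known_pki_issuers: Collection[str] = ()) -> str:
    """Assign a certificate-based TGT request to one of the aggregation lines."""
    # Assumption: lowercase so the [a-f0-9] patterns also match uppercase hex.
    serial, thumbprint = serial.lower(), thumbprint.lower()
    if AZURE_ISSUER.fullmatch(issuer):
        return "Azure"
    if issuer.startswith("CN=") and serial == "01":
        return "Hello4B self gen"
    if HEX32.fullmatch(serial) and HEX40.fullmatch(thumbprint):
        return "TPM enrolled"
    if issuer in known_pki_issuers:
        # Hypothetical allowlist: your CA server names, trusted third-party PKI.
        return "Known PKI"
    if HEX38.fullmatch(serial) and HEX40.fullmatch(thumbprint):
        return "Windows server CS role issued"
    return "Rest"
```

Whatever ends up in “Rest” is what deserves a closer look.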

Using real data, let’s see which requests we can filter out. For this, we can build the following aggregation using the regular expressions described above:

Aggregation of certificate-based ticket request events in the Kaspersky MDR service

Take a look at the “Rest” line, which holds the 13 remaining unfiltered events. Their details are shown below; pay particular attention to the CertIssuerName field.

Expanded list of unfiltered certificate-based ticket request events

Exploring the Whisker code

As I mentioned, in our example the certificate was generated by Whisker itself with default parameters. See here for a description of the procedure for generating a self-signed certificate.

As we can see, Whisker tries to pass off its certificates as Windows Hello for Business certificates (the variant in which the key pair is generated programmatically). However, the original certificates (generated independently by a Windows PC to use this functionality) contain an error: the Distinguished Name (DN) notation in the CertIssuerName field uses the format “CN=…”. The attackers’ toolkit is free of this error, which is suspicious. The second and third lines can be compared with the test bench data, but they come from the MDR production system (see also below).

We can add a Painless script directly in Kibana that finds all 4768 events in which CertIssuerName matches TargetAccountName, ignoring case.

```json
{
  "query": {
    "bool": {
      "filter": {
        "script": {
          "script": {
            "lang": "painless",
            "source": "doc['CertIssuerName.raw'].size() != 0 && doc['TargetAccountName.raw'].size() != 0 && doc['CertIssuerName.raw'].value.toLowerCase().equals(doc['TargetAccountName.raw'].value.toLowerCase())"
          }
        }
      }
    }
  }
}
```

There are ten such events, all of which relate to the use of the Whisker utility.
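Outside Kibana, the same comparison is a one-liner. This is a minimal Python sketch that assumes the event fields are named as in the query above; in raw Windows events the target account is stored in TargetUserName, so adjust the key to your mapping. issuer_matches_account is a hypothetical helper name.

```python
from typing import Mapping


def issuer_matches_account(event_data: Mapping[str, str]) -> bool:
    """True when CertIssuerName equals TargetAccountName, ignoring case."""
    issuer = (event_data.get("CertIssuerName") or "").strip()
    account = (event_data.get("TargetAccountName") or "").strip()
    if not issuer or not account:
        return False  # skip events where either field is empty
    return issuer.casefold() == account.casefold()
```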

Exploring fields with ticket flags

Now let’s consider the winlog.event_data.TicketOptionsDescription field in events from the test bench over an arbitrary time interval during which both forged and legitimate TGT requests occur.

What’s striking is the absence of the name-canonicalize flag, which plays an important role in the Kerberos infrastructure. A service or account can have multiple principal names. For example, if a host is known by several names, the services running on it may have multiple Service Principal Names (SPNs). To spare the client from requesting a ticket for each name, the KDC can provide it with mapping information while credentials are being obtained. The client requests this functionality by setting the name-canonicalize flag: if the “canonicalize” option is set, the KDC may modify the names and SPNs of both the client and the server in the response and in the TGT. In our case this flag is missing, which, as noted, is suspicious. Let’s find all tickets requested using PKINIT (certificate-based) that lack this flag. We build the query on Kaspersky MDR product data.

The results show Whisker + Rubeus activity over the last 30 days on our test bench (the AD-Gam host) and, in the remaining events, the work of my colleague, who was testing a group of vulnerabilities in AD CS settings that we refer to collectively as ADCS ESC or Certified Pre-Owned. In addition, there is one false positive, which can be filtered out by certificate name, and one incident that was reported to the client. That’s a good conversion rate.

Let’s use Rubeus as an example to see why the name-canonicalize flag is not set in ticket requests.

It turns out that Rubeus deliberately does not set this flag. The same goes for Impacket, the de facto standard toolkit for security analysts who work with Kerberos (and much else). This explains why our search for flagless tickets also turned up the work of my colleague, who tested ESC techniques using an Impacket-based toolkit. Such utilities are plentiful thanks to the simplicity of the code and the popularity of Python.
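The check behind this section, a PKINIT (certificate-based) TGT request without the name-canonicalize flag, can be sketched in Python as follows. It assumes the raw TicketOptions value is available as a hexadecimal string such as 0x40810010 and treats a non-empty CertIssuerName as the sign of PKINIT; the function name is hypothetical. Name-canonicalize is bit 15 in Kerberos’s most-significant-bit-first numbering, which corresponds to the mask 0x00010000.

```python
from typing import Mapping

# name-canonicalize is bit 15 in Kerberos MSB-first numbering (RFC 6806),
# i.e. the mask 1 << (31 - 15) == 0x00010000.
CANONICALIZE_MASK = 1 << (31 - 15)


def is_pkinit_without_canonicalize(event_data: Mapping[str, str]) -> bool:
    """Flag certificate-based TGT requests that lack name-canonicalize."""
    issuer = (event_data.get("CertIssuerName") or "").strip()
    if issuer in ("", "-"):
        return False  # no certificate, so not a PKINIT request
    raw = event_data.get("TicketOptions")
    if not raw:
        return False  # options unknown; nothing to conclude
    options = int(raw, 16)  # int() accepts the "0x" prefix when base is 16
    return (options & CANONICALIZE_MASK) == 0
```

As the Impacket example shows, a hit here is a lead for investigation, not proof of an attack: legitimate security testing with such toolkits produces the same pattern.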

What about the msDS-KeyCredentialLink attribute itself?

We can compare two attributes: one set legitimately during Windows Hello for Business configuration and one set by Whisker. They differ, and while comparing them I wrote a tool that finds artifacts of an illegitimately set attribute, for example, one written by Whisker.

You can download this utility and use it yourself in a development environment; while debugging, try to find and compare the key differences between the “good” and “bad” attributes.

Things to pay attention to:

- Does the msDS-KeyCredentialLink attribute contain a DeviceId (in GUID format)? If it does but there is no object with this ID in the domain, that’s suspicious. If there is such an object and it belongs to the Azure AD connector, the case is likely legitimate.

- The Flags field does not contain MFANotUsed, whereas in legitimate cases it typically does.

- KeyMaterial has a length other than 270 bytes. This is exactly the kind of artifact that Whisker leaves behind.

- KeyApproximateLastLogonTimeStamp and KeyCreationTime are almost identical. However, this indicator is less reliable and is best not used.
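To make the checklist concrete, here is a minimal Python sketch of the first three checks. It is not the utility mentioned above. It assumes the attribute value is read in its DN-Binary form (B:<hex length>:<hex blob>:<DN>) and parses the KEYCREDENTIALLINK_BLOB layout described in MS-ADTS: a 4-byte version 0x00000200, then entries made of a 2-byte little-endian length, a 1-byte identifier and the value. The MFANotUsed bit value (0x02) follows the DSInternals naming and should be verified against the specification. Resolving DeviceId values to domain objects (and recognizing the Azure AD connector) is left to the caller, who passes in the set of known device GUIDs. The timestamp check is omitted as the least reliable.

```python
import uuid
from typing import Collection, Dict, List

# KEYCREDENTIALLINK_ENTRY identifiers (MS-ADTS).
KEY_MATERIAL = 0x03
DEVICE_ID = 0x06
CUSTOM_KEY_INFORMATION = 0x07
# CustomKeyInformation flag, value as named in DSInternals (assumption: verify).
MFA_NOT_USED = 0x02
# Legitimate KeyMaterial length cited in the checklist above.
EXPECTED_KEY_MATERIAL_LEN = 270


def parse_key_credential(dn_binary: str) -> Dict[int, bytes]:
    """Parse one 'B:<hex length>:<hex blob>:<DN>' value into {identifier: value}."""
    _, length, hex_blob, _dn = dn_binary.split(":", 3)
    blob = bytes.fromhex(hex_blob[: int(length)])
    if blob[:4] != b"\x00\x02\x00\x00":  # version 0x00000200, little-endian
        raise ValueError("unexpected KEYCREDENTIALLINK_BLOB version")
    entries: Dict[int, bytes] = {}
    pos = 4
    while pos + 3 <= len(blob):
        size = int.from_bytes(blob[pos:pos + 2], "little")  # length of Value
        ident = blob[pos + 2]
        entries[ident] = blob[pos + 3:pos + 3 + size]
        pos += 3 + size
    return entries


def suspicious_traits(entries: Dict[int, bytes],
                      known_device_ids: Collection[uuid.UUID]) -> List[str]:
    """Apply the DeviceId, Flags and KeyMaterial checks from the list above."""
    findings = []
    device_id = entries.get(DEVICE_ID)
    if device_id is not None and len(device_id) == 16:
        guid = uuid.UUID(bytes_le=device_id)  # stored in .NET (little-endian) order
        if guid not in known_device_ids:
            findings.append(f"DeviceId {guid} matches no known object")
    custom = entries.get(CUSTOM_KEY_INFORMATION, b"")
    flags = custom[1] if len(custom) >= 2 else 0  # byte 0 is Version, byte 1 is Flags
    if not (flags & MFA_NOT_USED):
        findings.append("Flags lack MFANotUsed")
    material = entries.get(KEY_MATERIAL, b"")
    if len(material) != EXPECTED_KEY_MATERIAL_LEN:
        findings.append(f"KeyMaterial is {len(material)} bytes, not 270")
    return findings
```

An empty list means none of the three artifacts were found; any entry is a reason to compare the value against a known-good Windows Hello for Business attribute.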

Wrap-up

The attacks described above are fairly effective, but they can be detected when a forged certificate is used. Knowing the infrastructure (ideally, including a list of all active keys) and monitoring it will help the security expert here. Also of great help is the ability to spot common patterns and artifacts of attacks that use forged certificates, and my utility simplifies the search.

Anomaly detection in certificate-based TGT requests