This article provides an overview of methods used to detect malicious code; of the functional (and to some extent chronological) connections between these methods; and of their technological and applied features. Many of the technologies and principles covered in this article are still current today, not only in the antivirus world, but also in the wider context of computer security systems. However, some of the technologies used by the antivirus industry – such as unpacking packed programs and streaming signature detection – are beyond the scope of this article.

The first malware detection technology was based on signatures: segments of code that act as unique identifiers for individual malicious programs. As viruses have evolved, the technologies used to detect them have also become more complex. Advanced technologies (heuristics and behaviour analyzers) can collectively be referred to as ‘nonsignature’ detection methods.

Although the title of this article implies that the entire spectrum of malware detection technologies is covered, it primarily focuses on nonsignature technologies; this is because signatures are primitive and repetitive and there is little to discuss. Furthermore, while signature scanning is widely understood, most users do not have a solid understanding of nonsignature technologies. This article explains the meanings of terms such as “heuristic,” “proactive detection,” “behavioral detection” and “HIPS,” and examines how these technologies relate to one another and what their advantages and drawbacks are. This article, like our previously published The evolution of self-defense technologies in malware, aims to systematize and objectively examine certain issues relating to malicious code and defending systems against malicious programs. Articles in this series are designed for readers who have a basic understanding of antivirus technologies, but who are not experts in the field.

Malware defense systems: a model

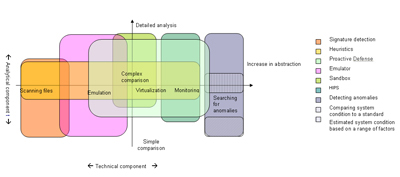

Let’s start by examining how malware detection technologies work using the following model.

Any protection technology can be separated into two components: a technical component and an analytical component. Although these components may not be clearly separate at a module or algorithm level, in terms of function they do differ from each other.

The technical component is a collection of program functions and algorithms that provide data to be analyzed by the analytical component. This data may be file byte code, text strings within a file, a discrete action of a program running within the operating system or a full sequence of such actions.

The analytical component acts as a decision-making system. It consists of an algorithm that analyzes data and then issues a verdict about the data. An antivirus program (or other security software) then acts in accordance with this verdict in line with the program’s security policy: notifying the user, requesting further instructions, placing a file in quarantine, blocking unauthorized program actions, etc.

As an example, let’s use this model to examine classic methods based on signature detection. A system that gets data about the file system, files and file contents acts as the technical component. The analytical component is a simple operation that compares byte sequences. Broadly speaking, the file code is input for the analytical component; the output is a verdict on whether or not that file is malicious.
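As a rough illustration of this model, the Python sketch below separates the two components for the signature case. All names here (`collect_file_bytes`, `compare_signatures`, `apply_policy`, `scan`) and the entries in `SIGNATURES` are invented for this example and do not come from any real product; a real scanner would read files from disk and use a far larger database.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical signature database: detection name -> byte sequence.
SIGNATURES = {
    "Example.Virus.A": b"\xde\xad\xbe\xef",
    "Example.Trojan.B": b"EVIL_PAYLOAD",
}


@dataclass
class Verdict:
    malicious: bool
    name: Optional[str] = None


def collect_file_bytes(contents: bytes) -> bytes:
    """Technical component: supply the data to be analyzed (here, raw bytes)."""
    return bytes(contents)


def compare_signatures(data: bytes) -> Verdict:
    """Analytical component: a simple comparison of byte sequences."""
    for name, signature in SIGNATURES.items():
        if signature in data:
            return Verdict(True, name)
    return Verdict(False)


def apply_policy(verdict: Verdict) -> str:
    """Security policy: decide what to do with the verdict."""
    return "quarantine" if verdict.malicious else "allow"


def scan(contents: bytes) -> str:
    data = collect_file_bytes(contents)   # technical component
    verdict = compare_signatures(data)    # analytical component
    return apply_policy(verdict)          # action according to policy
```

Keeping the two components as separate functions mirrors the point made above: either one could be swapped out (a different data source, a different decision algorithm) without touching the other.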

When using the model above, any protection system can be viewed as a complex number: something that connects two separate constituents, i.e. the technical and analytical components. Analyzing technologies in this way makes it easy to see how the components relate to one another and what their pluses and minuses are. In particular, using this model makes it easier to get to the bottom of how certain technologies work. For example, this article will discuss how heuristics as a method for decision-making are simply one type of analytical component, rather than a truly independent technology. And it will consider HIPS (Host Intrusion Prevention System) as just a type of technical component, a way to collect data. These terms do not contradict one another, and they also do not fully characterize the technology that they are used to describe: we can discuss heuristics without specifying exactly what data is undergoing heuristic analysis, and we can talk about a HIPS without knowing anything about the principles that guide the system in issuing verdicts.

These technologies will be discussed in more detail in their respective sections. Let’s first examine the principles at the heart of any technology used to search for malicious code: technical (methods for gathering data) and analytical (methods for processing the collected data).

The technical component

The technical component of a malware detection system collects data that will be used to analyze the situation.

On one hand, a malicious program is a file containing specific content. On the other hand, it is a collection of actions that take place within an operating system. It is also the sum total of final effects within an operating system. This is why program identification can take place at more than one level: by byte sequence, by action, by the program’s influence on an operating system, etc.

The following are all ways that can be used to collect data for identifying malicious programs:

- treating a file as a mass of bytes

- emulating [1] the program code

- launching the program in a sandbox [2] (and using other similar virtualization technologies)

- monitoring system events

- scanning for system anomalies

These methods are listed in terms of increased abstraction when working with code. The level of abstraction here means the way in which the program being run is regarded: as an original digital object (a collection of bytes), as a behaviour (more abstract than the collection of bytes) or as a collection of effects within an operating system (more abstract than the behaviour). Antivirus technology has, more or less, evolved along these lines: working with files, working with events via a file, working with a file via events, and working with the environment itself. This is why the list above naturally illustrates chronology as well as methods.

It should be stressed that the methods listed above are not so much separate technologies as they are theoretical stages in the continuing evolution of technologies used to collect data for detecting malicious programs. Technologies gradually evolve and intersect with one another. For example, emulation may be closer to the first item in the list if it is implemented in such a way that it only partially handles a file as a mass of bytes. Or it may be closer to the third item if we are talking about full virtualization of system functions.

The methods are examined in more detail below.

Scanning files

The very first antivirus programs analyzed file code which was treated as byte sequences. Actually, “analyze” is probably not the best term to use, as this method was a simple comparison of byte sequences against known signatures. However, here we are interested in the technical aspect of this technology, namely getting data as part of the search for malicious programs. This data is extracted from files and transmitted to the decision-making component as a mass of bytes structured in a particular way.

A typical feature of this method is that the antivirus works only with the source byte code of a program and does not take program behaviour into account. Despite the fact that this method is relatively old, it is not out of date, and is used in one way or another by all modern antivirus software – just not as the sole or even as the main method, but as a complement to other technologies.

Emulation

Emulation technology is an intermediary stage between processing a program as a collection of bytes and processing a program as a particular sequence of actions.

An emulator breaks down a program’s byte code into commands, and then launches each command in a virtual environment which is a copy of the computer environment. This allows security solutions to observe program behavior without any threat being posed to the operating system or user data (which would inevitably happen if the program were run in the real, i.e. non-virtual, environment).

An emulator is an intermediary step in terms of levels of abstraction in working with a program. Roughly speaking, we can say that while an emulator still works with a file, it does analyze events. Emulators are used in many (possibly even all) major antivirus products, usually either as an addition to a core, lower-level file engine or as insurance for a higher-level engine (such as a sandbox or system monitoring).
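The idea can be sketched with a toy emulator. The two-byte instruction format and the opcodes below are entirely made up for this illustration (real emulators decode actual processor instructions); the point is only that the code is decoded command by command, executed against a copy of the environment, and turned into a list of observable events.

```python
from typing import Dict, List, Tuple

# Hypothetical instruction set: each instruction is (opcode, operand).
OPCODES = {
    0x01: "open_file",
    0x02: "write_file",
    0x03: "delete_file",
    0xFF: "halt",
}


def emulate(code: bytes, files: Dict[int, bytes]) -> List[Tuple[str, int]]:
    """Run `code` against a copy of `files` and return the observed events."""
    virtual_files = dict(files)  # the real environment is never touched
    events: List[Tuple[str, int]] = []
    pc = 0  # program counter
    while pc + 1 < len(code):
        opcode, operand = code[pc], code[pc + 1]
        pc += 2
        action = OPCODES.get(opcode)
        if action is None:
            # Unknown instruction: record it and stop emulating.
            events.append(("unknown_instruction", opcode))
            break
        if action == "halt":
            break
        if action == "write_file":
            virtual_files[operand] = b"modified"
        elif action == "delete_file":
            virtual_files.pop(operand, None)
        events.append((action, operand))
    return events
```

The returned event list is what would be handed to the analytical component; the emulator itself issues no verdict.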

Virtualization: the sandbox

Virtualization as it is used in so-called sandboxes is a logical extension of emulation. The sandbox works with programs that are run in a real environment but the environment is strictly controlled. The name sandbox itself provides a relatively accurate picture of how the technology works. You have an enclosed space in which a child can play safely. In the context of information security, the operating system is the world, and the malicious program is the rambunctious child. The restrictions placed on the child are a set of rules for interaction with the operating system. These rules may include a ban on modifying the operating system’s directory, or restricting work with the file system by partially emulating it. For example, a program that is launched in a sandbox may be fed a virtual copy of a system directory so that modifications made to the directory by the program under investigation do not impact the way the operating system works. Any point of contact between the program and its environment (such as the file system and system registry) can be virtualized in this way.
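A minimal sketch of the "virtual copy of a system directory" idea follows. The class `SandboxedFileSystem` and the function `run_in_sandbox` are hypothetical names, and the file system is modelled as a plain dictionary; the sketch assumes the program under investigation is a real callable that can only reach files through the object it is given.

```python
from typing import Callable, Dict, List, Set, Tuple


class SandboxedFileSystem:
    """Overlay on top of the real files: reads fall through, writes do not."""

    def __init__(self, real_files: Dict[str, bytes]) -> None:
        self._real = real_files                 # never modified
        self._overlay: Dict[str, bytes] = {}    # writes land here
        self._deleted: Set[str] = set()
        self.log: List[Tuple[str, str]] = []    # every point of contact

    def read(self, path: str) -> bytes:
        self.log.append(("read", path))
        if path in self._deleted:
            raise FileNotFoundError(path)
        if path in self._overlay:
            return self._overlay[path]
        if path in self._real:
            return self._real[path]
        raise FileNotFoundError(path)

    def write(self, path: str, data: bytes) -> None:
        self.log.append(("write", path))
        self._deleted.discard(path)
        self._overlay[path] = data

    def delete(self, path: str) -> None:
        self.log.append(("delete", path))
        self._overlay.pop(path, None)
        self._deleted.add(path)


def run_in_sandbox(program: Callable[[SandboxedFileSystem], None],
                   real_files: Dict[str, bytes]) -> List[Tuple[str, str]]:
    """Run the program with virtualized file access and return the log."""
    fs = SandboxedFileSystem(real_files)
    program(fs)
    return fs.log
```

The program sees its own modifications, so it behaves as it would on a real system, while the underlying files stay as they were.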

The line between emulation and virtualization may be a fine one, but it is a clear one. The first technology is an environment in which a program is run (and fully contained and controlled as it runs). The latter uses the operating system as the environment, and the technology merely controls the interaction between the operating system and the program. Unlike emulation, in virtualization the environment is on separate but equal footing with the technology.

Protection using the kind of virtualization described above doesn’t work with files, but with program behavior – and it doesn’t work with the system itself.

Sandboxing isn’t used extensively in antivirus products, mainly because, like emulation, it requires a large amount of resources. It’s easy to tell when an antivirus program uses a sandbox, because there will always be a time delay between when the program is launched and when it actually starts to run (or, if a malicious program is detected, there will be a delay between the program’s launch and the notification announcing a positive detection). At the moment, sandbox engines are used in only a handful of antivirus products. However, a great deal of research is currently being done into hardware virtualization, which may lead to this situation changing in the near future.

Monitoring system events

Monitoring system events is a more abstract method of collecting data which can be used to detect malicious programs. An emulator or sandbox observes each program separately; monitoring technology observes all programs simultaneously by registering all operating system events created by running programs.

Data is collected by intercepting operating system functions. By intercepting the call to a certain system function, information can be obtained about exactly what a certain program is doing in the system. Over time, the monitor collects statistics on these actions and transfers them to the analytical component for analysis.
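The interception step can be sketched in a few lines. In the example below, ordinary Python functions stand in for operating system functions, and `EventMonitor`, `create_file` and `set_registry_value` are hypothetical names chosen for illustration; a real monitor hooks actual system calls, typically at kernel level.

```python
import functools
from collections import Counter, defaultdict
from typing import Callable, DefaultDict


class EventMonitor:
    """Counts which 'system functions' each program calls."""

    def __init__(self) -> None:
        self.stats: DefaultDict[str, Counter] = defaultdict(Counter)

    def intercept(self, func: Callable) -> Callable:
        """Wrap a function so every call is recorded before it runs."""
        @functools.wraps(func)
        def hooked(program: str, *args, **kwargs):
            self.stats[program][func.__name__] += 1
            return func(program, *args, **kwargs)
        return hooked


monitor = EventMonitor()


@monitor.intercept
def create_file(program: str, path: str) -> str:
    # Stand-in for a real system function.
    return f"{program} created {path}"


@monitor.intercept
def set_registry_value(program: str, key: str, value: str) -> str:
    # Stand-in for a real system function.
    return f"{program} set {key}={value}"
```

After a few calls, `monitor.stats` holds per-program call counts for every intercepted function, which is the kind of accumulated data that would be passed on to the analytical component.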

This is currently the most rapidly evolving of these technologies. It is used as a component in several major antivirus products and as the main component in individual system monitoring utilities (called HIPS utilities, or simply HIPS – these include Prevx, CyberHawk and a number of others). However, given that it’s possible to get around any form of protection, this malware detection method is not exactly the most promising: a monitor can only observe a program once it has been launched in the real environment, and the risks this entails considerably reduce the effectiveness of the protection.

Scanning for system anomalies

This is the most abstract method used to collect data about a possibly infected system. It is included here as it is a logical extension of other methods, and because it demonstrates the highest level of abstraction among the technologies examined in this article.

This method makes use of the following features:

- an operating system, together with the programs running within that system, is an integrated system;

- the operating system has an intrinsic “system status”;

- if malicious code is run in the environment, then the system will have an “unhealthy” status; this differs from a system with a “healthy” status, in which there is no malicious code.

These features help determine a system’s status (and, consequently, whether or not malicious code is present in the system) by comparing the status to a standard or by analyzing all of the system’s individual parameters as a single entity.

In order to detect malicious code effectively using this method, a relatively complex analytical system (such as an expert system or neural network) is required. Many questions arise: what is the definition of “healthy” status? How does it differ from “unhealthy” status? Which discrete parameters can be tracked? How should these parameters be analyzed? Due to its complexity, this technology is still underdeveloped. Signs of its initial stages can be seen in some anti-rootkit utilities, where it makes comparisons with certain system samples taken from a standard (obsolete utilities such as PatchFinder and Kaspersky Inspector), or certain individual parameters (GMER, Rootkit Unhooker).
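The simplest variant, comparing the current status with a standard, might look like the sketch below. The parameter names used in the usage example (`hidden_processes` and so on) are invented, and the hard questions raised above (which parameters to track, and what counts as "healthy") are deliberately left open; the function only reports where a snapshot departs from a known-good baseline.

```python
from typing import Any, Dict, Tuple


def find_anomalies(baseline: Dict[str, Any],
                   current: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {parameter: (expected, observed)} for every deviation."""
    anomalies: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(baseline.keys() | current.keys()):
        expected = baseline.get(key)   # None if the parameter is new
        observed = current.get(key)    # None if the parameter vanished
        if expected != observed:
            anomalies[key] = (expected, observed)
    return anomalies


def is_healthy(baseline: Dict[str, Any], current: Dict[str, Any]) -> bool:
    """A system is treated as 'healthy' only if nothing deviates."""
    return not find_anomalies(baseline, current)
```

For example, with a baseline of `{"hidden_processes": 0, "autorun_entries": 3}` and a current snapshot of `{"hidden_processes": 1, "autorun_entries": 3}`, `find_anomalies` reports only `hidden_processes`. Treating any deviation as "unhealthy" is an oversimplification made for the sketch; a practical system would need the more complex analysis described above.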

An interesting metaphor

The analogy of the child which is used in the section on sandboxing can be extended. For example: an emulator is like a nanny that continually watches over a child to make sure s/he doesn’t do anything undesirable. System event monitoring is like a kindergarten teacher who supervises an entire group of children, and system anomaly detection can be compared to giving children full rein while keeping a record of their grades. And in terms of this metaphor, file byte analysis is like family planning, or more precisely, looking for the “twinkle” in a prospective parent’s eye.

And just like children, these technologies are developing all the time.

The analytical component

The degree of sophistication of decision-making algorithms varies. Roughly speaking, decision-making algorithms can be divided into three different categories, although there are many variants that fall between these three categories.

Simple comparison

In this category, a verdict is issued based on the comparison of a single object with an available sample. The result of the comparison is binary (i.e. “yes” or “no”). One example is identifying malicious code using a strict byte sequence. Another higher level example is identifying a suspicious program behavior by a single action taken by that program (such as creating a record in a critical section of the system registry or the autorun folder).
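The behavioural example can be written as a single yes/no rule. In this sketch the event format (an action name plus a target) and the function name `is_suspicious` are assumptions made for illustration; the two registry paths are the standard Windows autorun keys.

```python
# Registry locations treated as critical in this sketch (Windows autorun keys).
CRITICAL_KEYS = (
    r"HKLM\Software\Microsoft\Windows\CurrentVersion\Run",
    r"HKCU\Software\Microsoft\Windows\CurrentVersion\Run",
)


def is_suspicious(action: str, target: str) -> bool:
    """Binary verdict based on one action compared with one sample."""
    if action != "registry_write":
        return False
    target = target.lower()
    # Prefix match, so "...\Run\name" and "...\RunOnce" are both covered.
    return any(target.startswith(key.lower()) for key in CRITICAL_KEYS)
```

There is no weighting and no context here: one matching action is enough for a "yes", which is exactly what makes this category simple.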

Complex comparison

In this case a verdict is issued based on the comparison of one or several objects with corresponding samples. The templates for these comparisons can be flexible and the results will be probability based. An example of this is identifying malicious code by using several byte signatures, each of which is non-rigid (i.e. individual bytes are not determined). Another higher level example is identifying malicious code by API functions which are called non-sequentially by the malicious code with certain parameters.
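A sketch of the byte-signature example: each template below is a hex string in which `??` marks an undetermined byte, and the result is a score between 0 and 1 rather than a yes/no answer. The template syntax and the function names are assumptions for this illustration, and the score (the fraction of templates that match) is only a crude stand-in for a real probability estimate.

```python
import re
from typing import List


def compile_template(template: str):
    """Turn 'DE AD ?? EF' into a bytes regex where ?? matches any byte."""
    parts = []
    for token in template.split():
        if token == "??":
            parts.append(b".")  # wildcard: any single byte
        else:
            parts.append(re.escape(bytes([int(token, 16)])))
    # DOTALL so that the wildcard also matches the newline byte.
    return re.compile(b"".join(parts), re.DOTALL)


def match_score(data: bytes, templates: List[str]) -> float:
    """Fraction of the non-rigid templates found somewhere in the data."""
    if not templates:
        return 0.0
    hits = sum(1 for t in templates if compile_template(t).search(data))
    return hits / len(templates)
```

A caller would then compare the score with a threshold of its choosing; where that threshold sits is a trade-off between missed detections and false positives.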

Expert systems

In this category, a verdict is issued after a sophisticated analysis of data. An expert system may include elements of artificial intelligence. One example is identifying malicious code not by a strict set of parameters, but by the results of a multifaceted assessment of all of its parameters at once, taking into account the ‘potentially malicious’ weighting of each parameter and calculating the overall result.
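The weighting idea can be illustrated with a deliberately small scoring function. The parameter names, the weights and the threshold below are all hypothetical values picked for the example; a real expert system would track far more parameters and would tune (or learn) their weights.

```python
from typing import Dict, Tuple

# Hypothetical 'potentially malicious' weights, in points out of 100.
WEIGHTS = {
    "writes_to_autorun": 40,
    "hooks_keyboard": 30,
    "packed_executable": 20,
    "no_visible_window": 10,
}


def assess(parameters: Dict[str, bool], threshold: int = 50) -> Tuple[int, bool]:
    """Weigh all observed parameters at once and return (score, verdict)."""
    score = sum(weight for name, weight in WEIGHTS.items()
                if parameters.get(name, False))
    return score, score >= threshold
```

With these values, a program that writes to autorun and hooks the keyboard scores 70 and is flagged, while a program that is merely packed scores 20 and is not. No single parameter decides the outcome on its own, which is the difference from the comparison-based categories.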

Real technologies at work

Let’s now examine exactly which algorithms are used in which malware detection technologies.

Typically, manufacturers give new names to the new technologies they develop (Proactive Protection in Kaspersky Anti-Virus, TruPrevent from Panda, and DeepGuard from F-Secure). This is good as it means that individual technologies will not automatically be pigeon-holed in narrow technical categories. Nevertheless, using more general terms such as “heuristic,” “emulation,” “sandbox,” and “behaviour blocker” is unavoidable when attempting to describe technologies in an accessible, relatively non-technical way.

This is where the tangled web of terminology begins. These terms do not have clear-cut meanings (ideally, there would be one clear definition for each term). One person may interpret a term in a completely different way from someone else. Furthermore, the definitions used by the authors of so-called “accessible descriptions” are often very different from the meanings used by professionals. This explains the fact that descriptions of technologies on developer websites may be crammed with technical terminology while not actually describing how the technology works or giving any relevant information about it.

For example, some antivirus software manufacturers say their products are equipped with HIPS, proactive technology or nonsignature technology. A user may understand “HIPS” as being a monitor that analyzes system events for malicious code, and this may not be correct. This description could mean almost anything e.g. that an emulator engine is equipped with a heuristic analysis system (see below). This kind of situation arises even more often when a solution is described as heuristic without giving any other details.

This is not to say that developers are trying to deceive clients. It’s likely that whoever prepares the description of technologies has simply got the terms confused. This means that descriptions of technologies prepared for end users may not accurately describe how the technology works, and that clients should be cautious if using descriptions when selecting a security solution.

Now let’s take a look at the most common terms in antivirus technologies (see figure 1).

There are few variations in the meanings of signature detection: from a technical perspective, it means working with file byte code, and from an analytical point of view, it is a primitive means of processing data, usually by using simple comparison. This is the oldest technology, but it is also the most reliable. That’s why despite the considerable costs incurred in keeping databases up to date, this technology is still used today in all antivirus software.

There aren’t many possible interpretations of the terms emulator or sandbox, either. In this type of technology the analytical component can be an algorithm of any complexity, ranging from simple comparison to expert systems.

The term heuristic is less transparent. According to the Ozhegov-Shvedova dictionary, the definitive Russian dictionary, “heuristics is a combination of research methods capable of detecting what was previously unknown.” Heuristics are first and foremost a type of analytical component in protection software, but not a clearly defined technology. Outside a specific context, in terms of problem-solving, it closely resembles an “unclear” method used to resolve an unclear task.

When antivirus technologies first began to emerge – which was when the term heuristic was first used – the term meant a distinct technology: one that would identify a virus using several flexibly assigned byte templates, i.e. a system with a technical component (e.g. working with files) and an analytical component (using complex comparison). Today the term heuristic is usually used in a wider sense to denote technology that is used to search for unknown malicious programs. In other words, when speaking about heuristic detection, developers are referring to a protection system with an analytical component that uses a fuzzy search to find a solution (this could correspond to an analytical component which uses either complex comparison or an expert system; see figure 1). The technological foundation of the protection software, i.e. the method it uses to gather data for subsequent analysis, can range from simply working with files up to working with events or the status of the operating system.

Behavioral detection and proactive detection are terms which are even less clearly defined. They can refer to a wide variety of technologies, ranging from heuristics to system event monitoring.

The term HIPS is frequently used in descriptions of antivirus technologies, but not always appropriately. Despite the fact that the acronym stands for Host Intrusion Prevention System, this does not reflect the essential nature of the technology in terms of antivirus protection. In this context, the technology is very clearly defined: HIPS is a type of protection which from a technical point of view is based on monitoring system events. The analytical component of the protection software may be of any type, ranging from matching individual suspicious events to complex analysis of a sequence of program actions. When used to describe an antivirus product, HIPS may be used to denote a variety of things: primitive protection for a small number of registry keys, a system that provides notification of attempts to access certain directories, a more complex system that analyzes program behaviour or even another type of technology that uses system event monitoring as its basis.

Different malware detection methods: the pros and cons

If we examine technologies that protect against malware as a group rather than individually, and using the model introduced in this article, the following picture emerges.

The technical component of a technology is responsible for features such as how resource-hungry a program is (and as a result, how quickly it works), security and protection.

A program’s resource requirements are the share of processor time and RAM required either continually or periodically to ensure protection. If software requires a lot of resources, it may slow down system performance. Emulators run slowly: regardless of implementation, each emulated instruction will create several instructions in the artificial environment. The same goes for virtualization. System event monitors also slow systems down, but the extent to which they do so depends on the implementation. As far as file detection and system anomaly detection are concerned, the load on the system is also entirely dependent on implementation.

Security is the level of risk which the operating system and user data will be subjected to during the process of identifying malicious code. This risk is always present when malicious code is run in an operating system. The architecture of system event monitors means that malicious code has to be run before it can be detected, whereas emulators and file scanners may detect malicious code before it is executed.

Protection reflects the extent to which a technology may be vulnerable, or how easy it may be for a malicious program to hinder detection. It is very easy to combat file detection: it’s enough to pack a file, make it polymorphic, or use rootkit technology to disguise a file. It’s a little tougher to circumvent emulation, but it is still possible; a virus writer simply has to build any of a range of tricks [3] into the malicious program’s code. On the other hand, it’s very difficult for malware to hide itself from a system event monitor, because it’s nearly impossible to mask a behaviour.

In conclusion, the less abstract the form of protection, the more secure it will be. The caveat: the less abstract the form of protection, the easier it will be for malware to circumvent.

The analytical aspect of a technology is responsible for features such as proactivity (and the consequent impact on the necessity for frequent antivirus database updates), the false positive rate and the level of user involvement.

Proactivity refers to a technology’s ability to detect new, as yet unidentified malicious programs. For example, the simplest type of analysis (simple comparison) denotes the least proactive technologies, such as signature detection: such technologies are only able to detect known malicious programs. The more complex an analytical system is, the more proactive it is. Proactivity is directly linked to how frequently updating needs to be conducted. For example, signature databases have to be updated frequently; more complex heuristic systems remain effective for longer, and expert analytical systems can function successfully for months without an update.

The false positive rate is also directly related to the complexity of a technology’s analytical component. If malicious code is detected using a precisely defined signature or sequence of actions, as long as the signature (be it byte, behavioral or other) is sufficiently long, identification will be absolute. The signature will only detect a specific piece of malware, and not other malicious programs. The more programs a detection algorithm attempts to identify, the less clear it becomes, and the probability of detecting non-malicious programs increases as a result.

The level of user involvement is the extent to which a user needs to participate in defining protection policies: creating rules, exceptions and black and white lists. It also reflects the extent to which the user participates in the process of issuing verdicts by confirming or rejecting the suspicions of the analytical system. The level of user involvement depends on the implementation, but as a general rule the further analysis is from a simple comparison, the more false positives there will be that require correction. And correcting false positives requires user input.

In conclusion, the more complex the analytical system, the more powerful the antivirus protection is. However, increased complexity means an increased number of false positives, which can be compensated for by greater user input.

The model described above theoretically makes it easier to evaluate the pros and cons of any technology. Let’s take the example of an emulator with a complex analytical component. This form of protection is very secure (as it does not require the file being scanned to be launched) but a certain percentage of malicious programs will go undetected, either due to anti-emulator tactics used by the malicious code or due to inevitable bugs in the emulator itself. However, this type of protection has great potential and if carefully implemented will detect a high percentage of unknown malicious programs, albeit slowly.

How to choose nonsignature protection

Currently, most security solutions combine several different technologies. Classic antivirus programs often use signature detection in combination with some form of system event monitoring, an emulator and a sandbox. So what should you look for in order to find protection that best suits your specific needs?

First of all, keep in mind that there is no such thing as a universal solution or a ‘best’ solution. Each technology has advantages and drawbacks. For example, monitoring system events constantly takes up a lot of processor time, but this method is the toughest to trick. Malware can circumvent the emulation process by using certain commands in its code, but if those commands are used, the malicious code will be detected preemptively i.e. the system remains untouched. Another example: simple decision-making rules require too much input from the user, who will be required to answer a multitude of questions, whereas more complex decision-making rules, which do not require so much user input, give rise to multiple false positives.

Selecting technologies means choosing the golden mean; that is, picking a solution by taking specific demands and conditions into account. For example, those who work in vulnerable conditions (with an unpatched system, no restrictions on using browser add-ons, scripts, etc.) will be very concerned about security and will have sufficient resources to implement appropriate security measures. A sandbox-type system with a quality analytical component will best suit this kind of user. This type of system offers maximum security, but given current conditions, it will eat up a lot of RAM and processor time, which could slow the operating system beyond acceptable levels. On the other hand, an expert who wants to control all critical system events and protect him/herself from unknown malicious programs will do well with a real-time system monitor. This kind of system works steadily, but does not overload the operating system, and it requires user input to create rules and exceptions. Finally, a user who either has limited resources or does not want to overload his system with constant monitoring, and who does want the option to create rules, will be best served by simple heuristics. Ultimately, it’s not a single component that ensures quality detection of unknown malicious programs, but the security solution as a whole. A sophisticated decision-making method can compensate for simpler technologies.

Nonsignature systems used to detect previously unknown malicious code fall into two categories. The first includes stand-alone HIPS systems, such as Prevx and CyberHawk. The second includes leading antivirus products, which in their continued evolution towards greater effectiveness have come to use nonsignature technologies. Each has its own obvious advantage: the first category offers a highly dedicated solution which has unlimited potential for improvement in terms of quality, while the second makes use of the wealth of experience stemming from the multi-faceted battle against malicious programs.

In choosing a new product, the best recommendation is to trust personal impressions and independent test results.

Links

Independent antivirus tests:

- AV-Comparatives

- West Coast Labs (Checkmark Certification)

- AV-Test

- ICSA Labs

- Virus Bulletin

- Anti-Malware

Independent HIPS tests:

- Tech Support Alert

- Lycos

- AV-Comparatives: http://www.av-comparatives.org/seiten/ergebnisse/HIPS-BB-SB.pdf

[1] Emulation is an imitation of how one system works using the resources of another without losing the functional capabilities and without distorting the results. Emulation is performed by program and/or device resources.

[2] A sandbox is an environment in which it is safe to run a program; it is built on partial or full restriction or emulation of operating system resources. See also http://en.wikipedia.org/wiki/Sandbox.

[3] Techniques used to counteract emulation are based on the fact that emulators may react differently to certain commands than the processor does. This allows a malicious program to detect emulation and perform certain actions – for example, it may act based on an alternative algorithm.

The evolution of technologies used to detect malicious code