Introduction

The way threat actors use post-exploitation frameworks in their attacks is a topic we frequently discuss. For us, though, it’s not just about analyzing artifacts. Our company’s deep expertise allows us to study these tools and use what we learn to implement best practices in penetration testing, which helps organizations stay one step ahead.

As experts in systems security assessment and information security in general, we understand that a proactive approach always works better than simply responding to incidents that have already occurred. By “proactive”, we mean learning the new technologies and techniques that threat actors may adopt next. That is why we follow the latest research, analyze new tools, and advance our pentesting expertise.

This report describes how our pentesters use a Mythic framework agent. The text is written for educational purposes only and is intended as an aid for security professionals conducting penetration testing with the system owner’s consent.

It’s worth noting that Kaspersky experts assign high priority to detecting the tools and techniques described in this article, as well as many similar ones employed by threat actors in real-world attacks.

To counter malicious actors, we rely on solutions such as Kaspersky Endpoint Security, which use the technologies listed below.

- Behavioral analysis tracks processes running in the operating system and detects malicious activity, providing added protection for critical OS components such as the Local Security Authority Subsystem Service (LSASS) process.

- Exploit prevention stops threat actors from taking advantage of vulnerabilities in installed software and the OS itself.

- Fileless threat protection detects and blocks threats that, instead of residing in the file system as traditional files, exist as scheduled tasks, WMI subscriptions, and so on.

- Many other technologies contribute as well.

However, because our study discusses a sophisticated attack controlled directly by a malicious actor (or a pentester), robust defense calls for a layered approach to security. Such an approach must incorporate security tools that help SOC experts quickly detect malicious activity and respond in real time.

These include Endpoint Detection and Response, Network Detection and Response, and Extended Detection and Response solutions, as well as Managed Detection and Response services, which provide continuous monitoring and response to potential incidents. Using threat intelligence to obtain up-to-date, relevant information about attacker tactics and techniques is another cornerstone of comprehensive defense against sophisticated threats and targeted attacks.

This study is the product of our exploration and analysis of how we, as defenders, can best prepare and what we should expect. What follows is part one of the report, in which we compare pentesting tools and choose the option that suits the objectives of our study. Part two deals with how to communicate with the chosen framework and achieve our objectives.

Pentester tools: how to choose

An overview of ready-made solutions

Selecting pentesting tools can prove challenging. Few of them can avoid detection by EPP or EDR solutions. As soon as a pentesting tool gains popularity among attackers, defensive technologies begin detecting not only its behavior but also its individual components. Moreover, the ability to detect the tool becomes a key performance indicator for these technologies. As a result, pentesters have to spend more time preparing for each project.

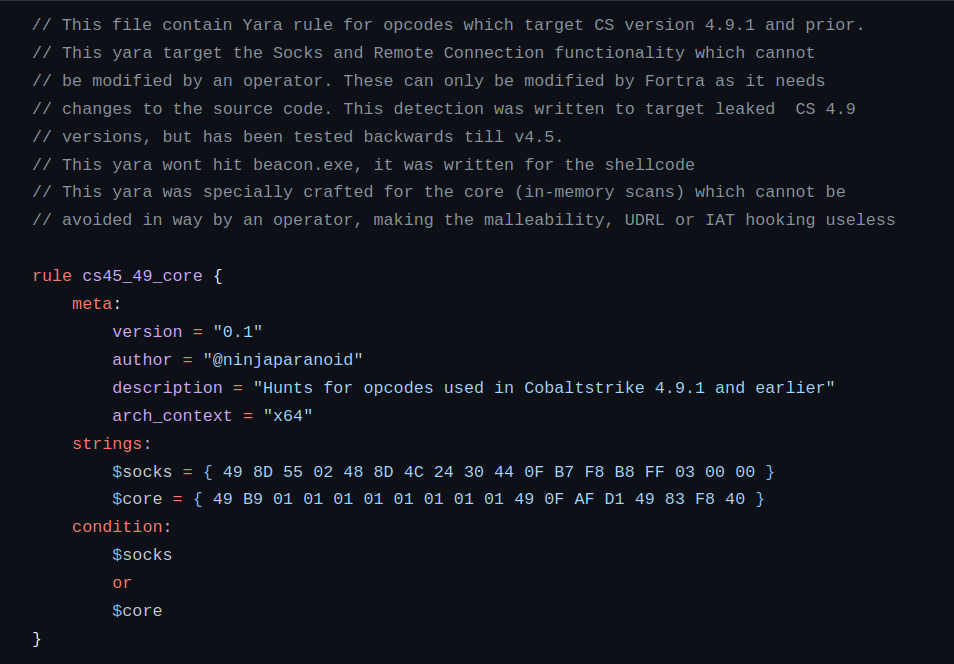

At the same time, many existing solutions have flaws that impede pentesting. Ethical hackers, for example, frequently use Cobalt Strike. In version 4.9.1 of the platform, the Beacon agent contains a specific opcode sequence. To avoid detection by security solutions, these opcodes must be changed, but doing so breaks the agent.

Another example is Metasploit’s Meterpreter payload, whose signatures appear in Microsoft’s antivirus database more than 230 times, making the tool significantly more difficult to use in projects.

The Sliver framework is an open-source project. It is in active development, and it can handle pentesting tasks. However, this project has a number of drawbacks, too.

- Payload size. A payload generated by the framework is 8–9 MB. This reduces flexibility because, for maximum versatility, a pentesting agent should ideally be about 100 KB.

- Stability issues. We’ve seen active sessions drop. The framework once lacked support for automatically using a proxy server from the Windows configuration, which also complicated its use. This has since been addressed.

The Havoc framework and its Demon payload are currently gaining popularity: both are evolving, and both support evasion techniques. However, the framework currently falls short of operational security (OPSEC) principles and suffers from stability issues. Additionally, payload customization in Havoc is limited by rigid parameters.

As you can see, we cannot fully rely on open-source projects for pentesting due to their significant shortcomings. On the other hand, creating tools from scratch would require extra resources, which is inefficient. So, it’s crucial to strike the right balance between building in-house solutions and leveraging open-source projects.

Payload structure

First, let’s define what kind of payload pentesting requires. We decided to split it into three modules: Stage 0, Stage 1, and Stage 2. The first module, Stage 0, creates and runs the payload. It must generate an artifact, such as shellcode, a DLL or EXE file, or a VBA script, and provide maximum flexibility by offering customizable parameters for running the payload. This module also handles circumvention of security measures and monitors the runtime environment.

The second module (Stage 1) must allow the operator to examine the host, perform initial reconnaissance, and then use that information to establish persistence via a payload maintaining covert communications. After successfully establishing persistence, this module must launch the third module (Stage 2) to perform further activities such as lateral movement, privilege escalation, data exfiltration, and credential harvesting.

The Stage 0 module has to be written from scratch, as available tools quickly get detected by security systems and become useless for penetration testing. To implement the Stage 1 module, we settled on a hybrid approach: partially modifying existing open-source projects while implementing some features in-house. For the third module (Stage 2), we also used open-source projects with minor modifications.

This article describes the implementation of the second module (Stage 1) in detail.

Formulating requirements

In light of the objectives outlined above, we formulated the following requirements for the Stage 1 module.

- Dynamic functionality, or modularity, for increased resilience. In addition, dynamic configuration allows adding techniques via new modules without changing the functional core.

- The ability to launch the third payload module (Stage 2).

- Minimal size (100–200 KB) and minimal traces left in the system.

- The module must comply with OPSEC principles and allow operations to run undetected by security controls. This means we must provide a mechanism for evading signature-based memory scanning.

- Support for non-standard (hidden) communication channels beyond HTTP and TCP, to maintain covert persistence and avoid network detection.

Choosing the best solution

While defining the requirements, we recognized the need for a modular design. First, we needed to determine the best way to add new features while tasks are running. One widely used method for dynamically adding functionality is reflective DLL injection, introduced in 2008. This type of injection has both upsides and downsides. The ReflectiveLoader function is fairly easy to detect, so we would need a custom implementation to support dynamic configuration. This is an effective yet costly way of achieving modularity, so we kept looking.

The PowerShell Empire framework, whose loader is based on reflective PowerShell execution, gained popularity in the mid-2010s. The introduction of strict monitoring and rigid policies surrounding PowerShell marked the end of its era, and .NET assemblies executed reflectively with the Assembly.Load method took its place. Around this time, toolkits like SharpSploit and GhostPack emerged. Cobalt Strike’s execute-assembly feature, introduced in 2018, injects a .NET assembly into a newly created process. However, process creation followed by injection is a strong indicator of compromise and is subject to rigorous monitoring. Code injection requires considerable planning and tailored resources, and it is easily detected. It is best used after initial reconnaissance has been performed and persistence established.

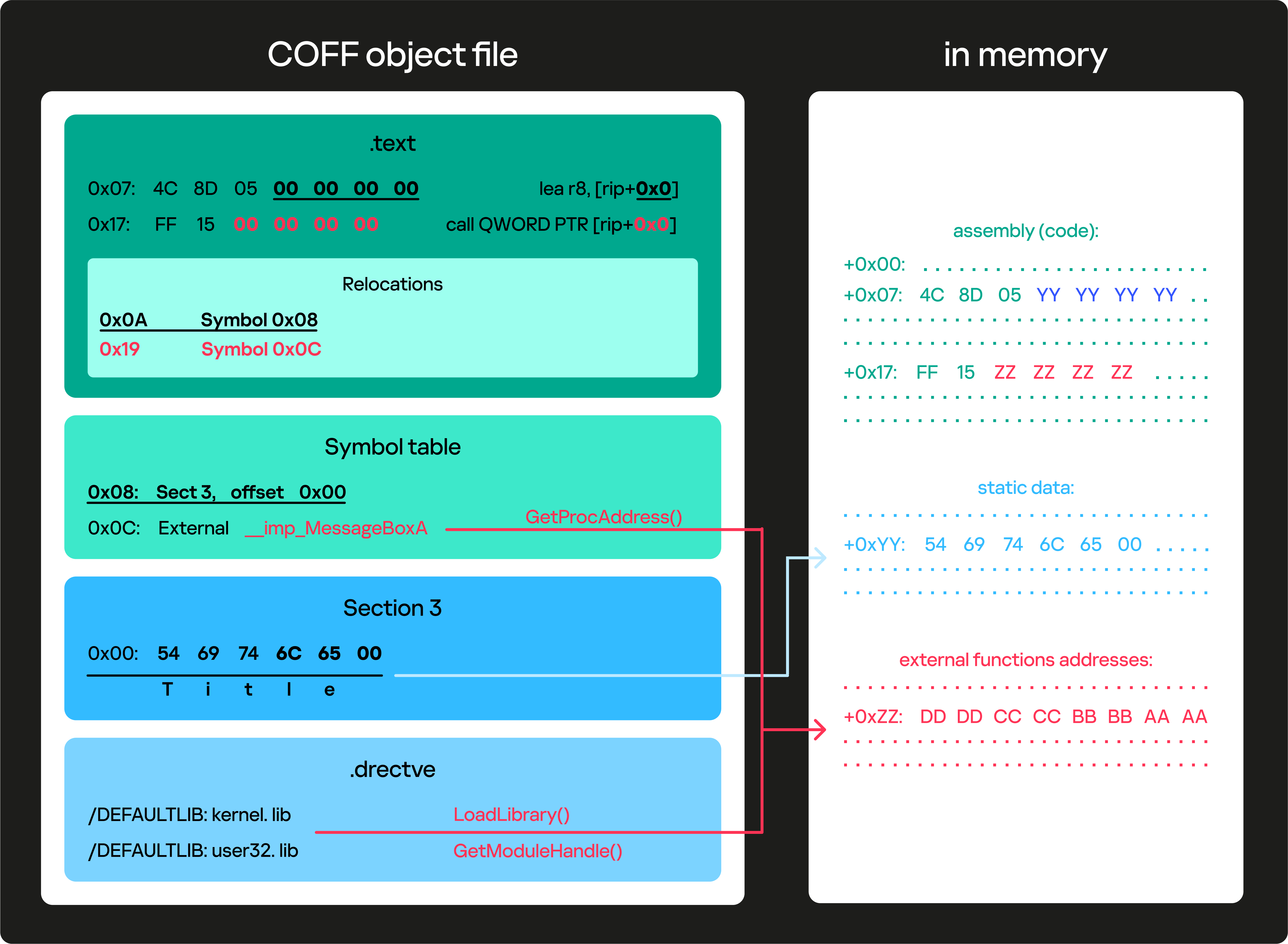

The next stage of framework evolution is executing object files in memory. An object file in COFF (Common Object File Format) contains the compiled version of the source code. Object files are typically not full-fledged programs: they are intermediate artifacts that the linker combines to build a project. An object file includes several important elements that ensure the executable code functions correctly.

- The header contains information about the target architecture, timestamp, number of sections and symbols, and other metadata.

- Sections are blocks that may include machine code, debugging information, linker directives, exception information, and static data.

- The symbol table lists functions and variables, along with information about where they are located.
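To make these elements concrete, here is a minimal Python sketch that reads the file header and section table of a COFF object file. It follows the `IMAGE_FILE_HEADER` and `IMAGE_SECTION_HEADER` layouts from Microsoft’s PE/COFF specification; the names `parse_coff`, `CoffHeader`, and `Section` are hypothetical, and the sketch only inspects the file, it does not load or run anything.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass

# Field layouts from the Microsoft PE/COFF specification (little-endian)
FILE_HEADER = struct.Struct("<HHIIIHH")        # IMAGE_FILE_HEADER, 20 bytes
SECTION_HEADER = struct.Struct("<8sIIIIIIHHI")  # IMAGE_SECTION_HEADER, 40 bytes


@dataclass
class CoffHeader:
    machine: int                  # e.g. 0x8664 for x64, 0x14C for x86
    number_of_sections: int
    time_date_stamp: int
    pointer_to_symbol_table: int  # file offset of the symbol table
    number_of_symbols: int
    size_of_optional_header: int  # normally 0 for object files
    characteristics: int


@dataclass
class Section:
    name: str
    size_of_raw_data: int
    pointer_to_raw_data: int      # file offset of the section contents
    pointer_to_relocations: int   # file offset of this section's relocation entries
    number_of_relocations: int
    characteristics: int          # flags such as code / readable / executable


def parse_coff(data: bytes) -> tuple[CoffHeader, list[Section]]:
    """Hypothetical helper: decode the file header and section table of a COFF object."""
    header = CoffHeader(*FILE_HEADER.unpack_from(data, 0))
    # The section table follows the file header and the (usually empty) optional header
    offset = FILE_HEADER.size + header.size_of_optional_header
    sections = []
    for _ in range(header.number_of_sections):
        (raw_name, _vsize, _vaddr, raw_size, raw_ptr, reloc_ptr,
         _lines_ptr, reloc_count, _lines_count, flags) = SECTION_HEADER.unpack_from(data, offset)
        # Short names are NUL-padded; names longer than 8 bytes ("/4") refer to the string table
        name = raw_name.rstrip(b"\0").decode("ascii", errors="replace")
        sections.append(Section(name, raw_size, raw_ptr, reloc_ptr, reloc_count, flags))
        offset += SECTION_HEADER.size
    return header, sections
```

For an x64 object file, `machine` is `0x8664` (`IMAGE_FILE_MACHINE_AMD64`), and each `Section` records where its raw contents and relocation entries sit in the file.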

Unlike running a .NET assembly with the Assembly.Load method, executing an object file does not require loading the CLR into the process.

Moreover, an object file is executed in the context of the current process, without the need to create a new process and inject code into it. The developers of the Cobalt Strike framework introduced and popularized this feature in 2020. In 2021, TrustedSec developed the open-source COFF Loader, which serves the same purpose: the tool loads a COFF file from disk and runs it. This functionality aligns perfectly with our objectives because it lets us perform the required actions via object files: surveying the system, gaining persistence, and initiating the next module, provided we add network retrieval and in-memory execution of the file to the project. In addition, when using COFF Loader, the pentester can remain undetected in the system for a long time.

To interact with the agent in this study, we decided to use BOFs (Beacon Object Files) designed for Cobalt Strike Beacon. A wide variety of open-source tools and functions are available as BOFs. By using different BOFs as separate modules, we can easily add new techniques at any time without modifying the agent’s core.

Another key requirement for Stage 1 is a minimal payload size. Several approaches can achieve this. For instance, using C# can result in a Stage 1 size of around 20 KB. This is quite good, but the payload then depends on the .NET Framework. If we use a native language like C, the unencrypted payload is approximately 50 KB, which fits our needs.

The Mythic framework supports our payload requirements. Its microservice architecture makes it easy to add arbitrary server-side functionality. For example, the module build process takes place inside a container and is fully defined by us. This allows us to replace specific strings with arbitrary values if they get detected. Furthermore, Mythic supports both standard communication protocols (HTTPS, TCP) and covert channels, such as encrypted communication over Slack or Telegram. Finally, writing the agent in C keeps the payload small. All of these factors make the Mythic framework, together with an agent that interacts with it to execute BOFs, an optimal choice for implementing the second module.

Communication model

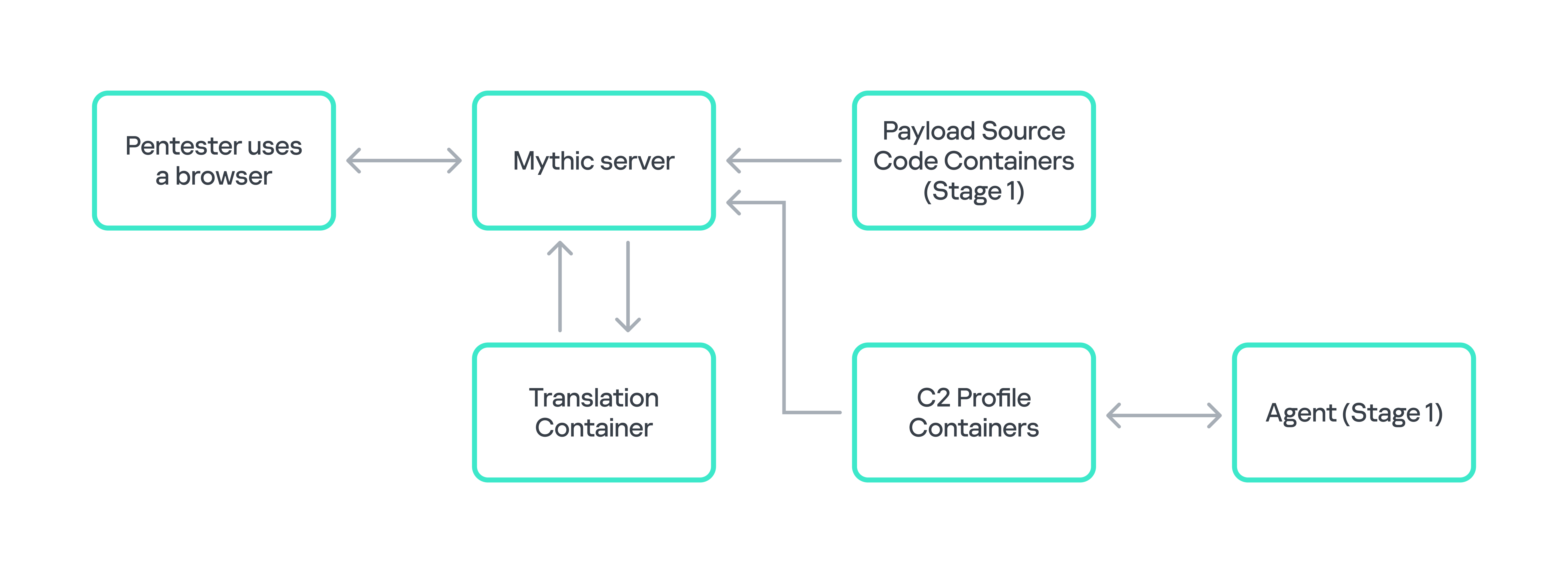

In the communication process between the agent and the framework, we need to focus on three elements: payload containers, C2 profile containers, and the translation container. Payload containers hold the agent’s source code and are responsible for building the payload. C2 profile containers are responsible for communicating with the agent: they receive traffic from the agent and forward it to Mythic for further processing. The translation container handles the encryption and decryption of network traffic. We will use HTTP(S) to interact with Mythic, so the C2 profile is a web server listening on ports 80 and 443.

Loading an object file

To load and execute an object file, the agent must process the relocations of the .text section: each relocation entry points to a placeholder, usually filled with zeros, that must be replaced with the relative address of an external function or static data. This is known as symbol relocation, which resolves references within a particular section of the object file. The agent also places these symbols in memory, for example, after the code section.
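The patching step can be illustrated with two common x64 relocation types from the PE/COFF specification. The sketch below is a simulation under stated assumptions: it works on a `bytearray` with made-up integer addresses instead of real memory, and `apply_relocation` and `read_relocations` are hypothetical helper names, not any loader’s API. For `IMAGE_REL_AMD64_REL32`, the patched value is the target address minus the address of the byte that follows the 4-byte field, plus the addend already stored in the placeholder.

```python
import struct

# Relocation type constants from the PE/COFF specification (x64)
IMAGE_REL_AMD64_ADDR64 = 0x0001  # 64-bit absolute address of the target
IMAGE_REL_AMD64_REL32 = 0x0004   # 32-bit address relative to the byte after the field

# IMAGE_RELOCATION: VirtualAddress, SymbolTableIndex, Type (10 bytes)
RELOCATION = struct.Struct("<IIH")


def read_relocations(data: bytes, pointer: int, count: int) -> list:
    """Hypothetical helper: return (offset_in_section, symbol_index, type) tuples."""
    return [RELOCATION.unpack_from(data, pointer + i * RELOCATION.size) for i in range(count)]


def apply_relocation(section: bytearray, section_base: int, offset: int,
                     rel_type: int, target: int) -> None:
    """Hypothetical helper: patch one placeholder in a simulated section image.

    section_base and target are plain integers standing in for addresses;
    nothing is allocated or executed.
    """
    if rel_type == IMAGE_REL_AMD64_REL32:
        addend = struct.unpack_from("<i", section, offset)[0]  # usually 0
        displacement = target - (section_base + offset + 4) + addend
        struct.pack_into("<i", section, offset, displacement)  # struct.error if out of range
    elif rel_type == IMAGE_REL_AMD64_ADDR64:
        addend = struct.unpack_from("<Q", section, offset)[0]
        struct.pack_into("<Q", section, offset, (target + addend) & 0xFFFFFFFFFFFFFFFF)
    else:
        raise NotImplementedError(f"relocation type {rel_type:#x} is not handled in this sketch")
```

For example, a `call rel32` instruction (`E8 00 00 00 00`) at the start of a section placed at `0x1000` and targeting `0x2000` receives the displacement `0x2000 - 0x1005 = 0xFFB`, because the field starts at offset 1 and is 4 bytes long.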

To find external functions, the agent has to analyze the libraries specified in the object file’s linker directives. For this, we used the LoadLibrary, GetModuleHandle, and GetProcAddress functions.
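Before anything can be resolved with GetProcAddress, the loader has to know which external symbols the object file references. The sketch below lists undefined external symbols from the COFF symbol table. It also assumes the naming convention that Cobalt Strike documents for BOFs (Dynamic Function Resolution), in which an import declared as `LIBRARY$Function` appears on x64 as `__imp_LIBRARY$Function`; the article does not say whether the agent relies on this convention or only on linker directives, so `split_bof_import` is hypothetical. The code performs static analysis only.

```python
from __future__ import annotations

import struct

# IMAGE_SYMBOL: Name, Value, SectionNumber, Type, StorageClass, NumberOfAuxSymbols (18 bytes)
SYMBOL = struct.Struct("<8sIhHBB")
IMAGE_SYM_CLASS_EXTERNAL = 2
IMAGE_SYM_UNDEFINED = 0


def symbol_name(raw: bytes, string_table: bytes) -> str:
    """Decode an 8-byte symbol name field (short name or string-table reference)."""
    if raw[:4] == b"\0\0\0\0":
        # Long name: bytes 4..8 hold an offset that counts the 4-byte size field of the string table
        offset = struct.unpack_from("<I", raw, 4)[0]
        end = string_table.index(b"\0", offset)
        return string_table[offset:end].decode("ascii", errors="replace")
    return raw.rstrip(b"\0").decode("ascii", errors="replace")


def undefined_externals(data: bytes, sym_ptr: int, sym_count: int) -> list[str]:
    """List external symbols that the object file references but does not define."""
    # The string table starts immediately after the last symbol record
    string_table = data[sym_ptr + sym_count * SYMBOL.size:]
    names = []
    i = 0
    while i < sym_count:
        raw, value, section_number, _type, storage_class, aux_count = SYMBOL.unpack_from(
            data, sym_ptr + i * SYMBOL.size)
        # Section 0 with value 0 means "undefined"; a non-zero value would be a common symbol
        if (storage_class == IMAGE_SYM_CLASS_EXTERNAL
                and section_number == IMAGE_SYM_UNDEFINED and value == 0):
            names.append(symbol_name(raw, string_table))
        i += 1 + aux_count  # auxiliary records occupy symbol-table slots too
    return names


def split_bof_import(name: str) -> tuple[str, str] | None:
    """Hypothetical helper for the assumed x64 BOF convention '__imp_LIBRARY$Function'."""
    prefix = "__imp_"
    if name.startswith(prefix) and "$" in name:
        library, _, function = name[len(prefix):].partition("$")
        return library, function
    return None
```

Under this assumption, a symbol named `__imp_KERNEL32$GetLastError` splits into the module name `KERNEL32` and the export `GetLastError`, which correspond to the arguments a loader would pass to LoadLibrary or GetModuleHandle and then to GetProcAddress.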

The diagram below clarifies how an object file is loaded and memory is allocated for its components.

The downsides of the solution

The method described above has a number of shortcomings. Because object file execution is blocking, multiple tasks cannot run simultaneously. Long-running tasks require other methods, such as process injection. However, this is not a critical flaw for the second module, which is not intended for long-running tasks.

Several other shortcomings are difficult to mitigate. For example, since the object file is executed in the current thread, a critical error will terminate the process. Furthermore, executing the object file in memory involves calling the VirtualAlloc function for section mapping and relocation, and calls to this WinAPI function might alert security solutions.

Implementing additional functionality during development and compilation can help complicate analysis and detection for more efficient pentesting and a longer agent life cycle.

Conclusion

Mythic’s features make it a convenient pentesting tool that covers the bulk of pentesting objectives. To utilize this framework efficiently, we created an agent that extends ready-made solutions with our own code. This configuration gave us suitable flexibility and enhanced protection against detection, which is most of what a pentester asks of a working tool.

Using a Mythic agent to optimize penetration testing