In the book The Hitchhiker’s Guide to the Galaxy there’s a character called the Babel fish, which is curiously able to translate into and from any language. Now, in the present-day world, the global cybersecurity industry speaks one language – English; however, sometimes you really do wish there was such a thing as a Babel fish to be able to help customers understand the true meaning of the marketing messages of certain vendors.

Here’s a fresh example.

Earlier this month the independent testing lab AV-Comparatives simultaneously conducted two tests of cybersecurity products using one and the same methodology. The only differences between the two tests were (i) in the line-ups of participating products in each; and (ii) in the names of the tests themselves: Comparative Test of Business Security Products and Comparison of ‘Next-Generation’ Security Products.

Strange? A little. So let me tell you what’s afoot here: why these practically identical tests were conducted at the same time.

It’s well-known already (to folks interested in IT security) how some cybersecurity vendors try to avoid open, public testing and comparisons with other products – so as not to expose their inadequacy. But by not taking part in such tests, the marketing machinery of these vendors loses a crucial ton of leverage: all potential customers – mostly corporate ones – always consult independent tests run by dependable specialist organizations. So, what were they to do? A solution was found: to join up with other ‘next-gen’ developers to be tested together and separately (no ‘traditional AV’ allowed!), to hide behind a convenient methodology, and coat it all with the BS buzz term ‘next generation’.

Days after the testing, the ‘next-gen’ participants published their own interpretations of the results based on dubious logical deduction, manipulation of figures, and biased marketing rhetoric. And you guessed it – those interpretations brought them all to the same conclusion, which was, roughly: “here, finally, it’s been publicly proven how next-gen reigns supreme over traditional products”!

Really? Ok, time we turned on the Babel fish…

Is it really true that next-gen products are great? And if so great… – great compared to what? Let’s compare the results of the ‘next-gen’ test with the above-mentioned twin-test – i.e., the same test (using the exact same methodology), only with different (non ‘next-gen’) participating products.

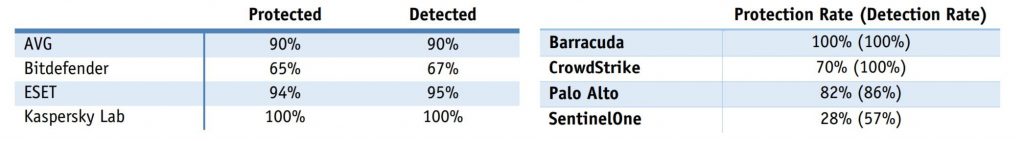

Important: the true quality of protection should be judged by the figure outside the brackets that corresponds to protection rate, not detection rate, since there’s no point in just detecting attacks but still then letting them take place, i.e., not stopping them.
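To make the difference between the two figures concrete, here’s a minimal sketch. It is not the lab’s actual scoring code: the `Outcome` type, the `rates` function and the sample numbers are all made up for illustration, and the output is simply formatted as protection rate outside the brackets and detection rate inside them.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One test case (hypothetical structure, not the lab's format)."""
    detected: bool  # the product flagged the attack
    blocked: bool   # the attack was actually stopped


def rates(outcomes):
    """Return (protection_rate, detection_rate) as percentages."""
    total = len(outcomes)
    if total == 0:
        raise ValueError("no test cases")
    protection = 100.0 * sum(o.blocked for o in outcomes) / total
    detection = 100.0 * sum(o.detected for o in outcomes) / total
    return protection, detection


# Made-up sample: 10 attacks, 9 detected, but only 7 actually stopped.
sample = (
    [Outcome(detected=True, blocked=True)] * 7
    + [Outcome(detected=True, blocked=False)] * 2
    + [Outcome(detected=False, blocked=False)]
)

protection, detection = rates(sample)
print(f"{protection:.0f}% ({detection:.0f}%)")  # prints "70% (90%)"
```

In this made-up sample the product ‘sees’ nine attacks out of ten, yet three of the ten still get through. It’s the 70% that matters to the user.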

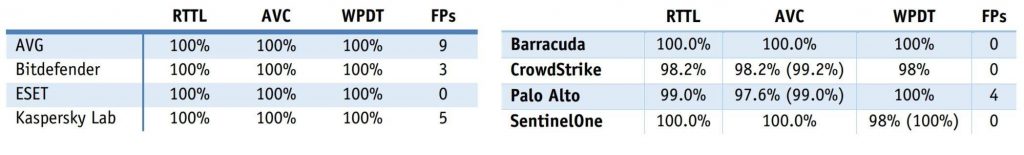

Protection from malware in different scenarios and false positives:

Protection against exploits:

Well, the clanging of medals in the next-generation camp seems to have come to a sudden halt, while their ‘victorious’ self-published reports can now be seen for what they really are: mere attempts to intentionally deceive users ‘in the best traditions of misleading test marketing’.

Judge for yourself:

One participant in its press release appears to have forgotten to tell anyone about its bombing on protection from exploits (28%), while also seeming to have switched its results on the protection rate in the WPDT scenario (100% instead of 98%).

Another participant also kept quiet about its modest result on protection from exploits (82%), but proudly described its… last-but-one place in the contest in this category as “…outperform[ing] other endpoint security competitors in exploit protection”. It also preferred not to mention its coming last in the AVC scenario test, but that didn’t stop it claiming that mythical ‘legacy AV’ (whatever that is) simply MUST be replaced by its products.

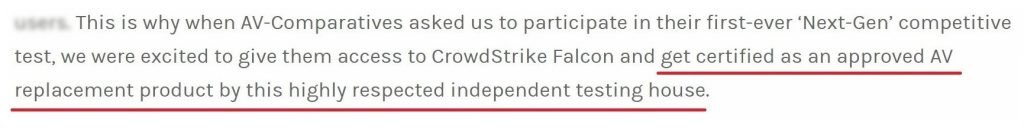

A third participant decided to get straight to the point by laying claim to the crown of the ‘most next-gen of all’, having received nothing short of a certification from this test lab to replace mythical ‘legacy AV’ with its next-gen products:

The Babel fish has a few other questions regarding this test.

The methodology used this time for testing protection against malicious programs was simpler than that used in the regular full-fledged Real World Protection Test by which other (non-‘next-gen’) products are normally certified. In the Real World Protection Test, six times more real cyberattack scenarios (WPDT) are used each month, for a whole year. And even adding RTTL and AVC scenarios doesn’t make up for this simplification.

So why was simplification of the methodology and a division of the participants (into ‘next-gen’ and ‘business’) needed? Was it an indulgence to the next-gen vendors, which were afraid of flopping big-time on regular tests? How well would these developers do in a full-fledged test together with the technological leaders?

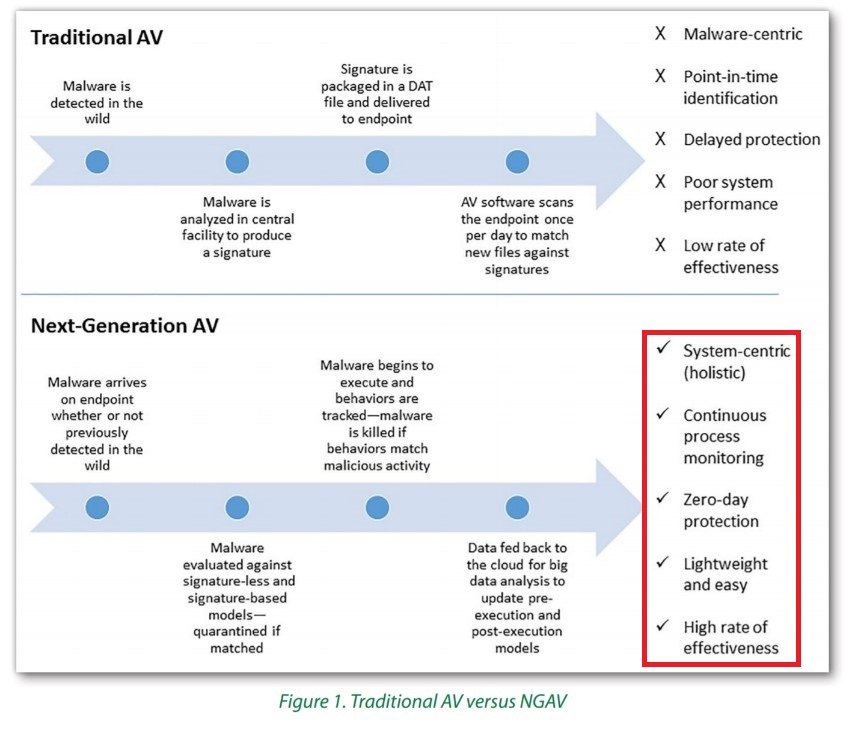

And the last question: what is ‘next generation’?

According to a comprehensive study by the SANS Institute conducted at the request of another self-proclaimed ‘next-gen’ vendor, the category ‘Next-generation AV’ covers all large vendors of cybersecurity solutions. Moreover, many ‘next-gen’ vendors do not qualify for the ‘Next-generation AV’ tag – especially when it comes to the level of effectiveness and protection from zero-day threats:

I can’t say that I fully agree with the above-mentioned definition: absent from it are such important things as multi-level protection, adaptability, and the ability to not only detect but also prevent, react to and predict cyberattacks, which are all much more important for the user. However, even this definition unequivocally states that all products need to be tested as per one and the same methodology.

Take-Aways:

First (in spite of everything): I want to express my thanks to AV-Comparatives for finally being able to conduct a public test of several ‘next-gen’ products. Ok, so the methodology used was WPDT-lite, and the test results can’t be used to directly compare participants. Still, as they say, you can’t have everything straight away – or – the first step is always the most difficult/crucial: the main thing is that ‘next-gen’ has finally been publicly tested by an authoritative independent lab, which is just what we’d been wanting for a long time.

Second: I hope that other independent test labs will follow AV-Comparatives’ example in testing ‘next-gen’ – preferably as per AMTSO standards – and, crucially, together with all vendors. And I hope the vendors, in turn, won’t throw obstacles in the test labs’ way.

Third: When choosing a cybersecurity solution it’s necessary to take into account as many different tests as possible. Reliable products set themselves apart by constantly notching up stable top results in different tests by different independent labs over many years.
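One way to read ‘stable top results’ is to look not only at a product’s average across many tests, but also at its worst showing. Here’s a rough sketch of that idea. The product names, the scores and the ranking rule (worst result first, then the average) are all made up for illustration; this isn’t any lab’s method.

```python
from statistics import mean

# Hypothetical scores (percent) from several tests by different labs.
scores = {
    "Product A": [99.0, 98.5, 99.5, 98.0],
    "Product B": [100.0, 80.0, 95.0, 60.0],
}


def summarize(results):
    """Map each product to (mean score, worst score, number of tests)."""
    return {
        name: (mean(vals), min(vals), len(vals))
        for name, vals in results.items()
    }


def rank(results):
    """Rank by worst score first, then by mean, to reward stable results."""
    summary = summarize(results)
    return sorted(
        summary,
        key=lambda name: (summary[name][1], summary[name][0]),
        reverse=True,
    )


for name in rank(scores):
    avg, worst, count = summarize(scores)[name]
    print(f"{name}: mean {avg:.1f}%, worst {worst:.1f}% over {count} tests")
```

With these made-up numbers, Product B’s single 100% makes for a nice press release, but its worst result drags it below the product that never dips under 98%.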

And finally: Now, just in time for next year’s budget planning, I hope ‘next-gen’ developers will allocate more resources to developing technologies and taking part in public tests, rather than to fancy advertising billboards, planned inaccuracies in press releases, and expensive parties stuffed with celebrities.

PS – from Babel fish:

“The word combination ‘next-generation security’ and its derivations in public communications – be they marketing material, advertising videos, white papers, or the arguments of a sales manager – can be a sign of aggressive telepathic matrixes directed at the promotion of pure BS, and thus necessitate a particularly astringent practical application of critical reason.”

From the author:

“I understood none of that, but fully agree with the fish – whatever it was it was babbling on about.”

Lost in Translation, or the Peculiarities of Cybersecurity Tests